- Date & Time: Tuesday, December 14, 2021; 1:00 PM EST

Speaker: Prof. Chris Fletcher, University of Waterloo

Research Areas: Dynamical Systems, Machine Learning, Multi-Physical Modeling

Abstract  Decision-making and adaptation to climate change require quantitative projections of the physical climate system and an accurate understanding of the uncertainty in those projections. Earth system models (ESMs), which solve the Navier-Stokes equations on the sphere, are the only tool that climate scientists have to make projections forward into climate states that have not been observed in the historical data record. Yet ESMs are incredibly complex and expensive codes and contain many poorly constrained physical parameters (for processes such as clouds and convection) that must be calibrated against observations. In this talk, I will describe research from my group that uses ensembles of ESM simulations to train statistical models that learn the behavior and sensitivities of the ESM. Once trained and validated, the statistical models are essentially free to run, which allows climate modelling centers to make more efficient use of precious compute cycles. The aim is to improve the quality of future climate projections by producing better calibrated ESMs, and to improve the quantification of the uncertainties by better sampling the equifinality of climate states.
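
The emulator idea can be sketched with a Gaussian-process surrogate, one common choice for this task; the abstract does not say which statistical models the group uses. The sketch assumes a single tunable parameter and a hypothetical `run_esm` function standing in for an expensive model run; the kernel length scale and noise level are illustrative, not fitted.

```python
import math

def rbf(a, b, length=0.3, var=1.0):
    """Squared-exponential covariance between two scalar parameter values."""
    return var * math.exp(-0.5 * ((a - b) / length) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    aug = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(c + 1, n):
            f = aug[r][c] / aug[c][c]
            for j in range(c, n + 1):
                aug[r][j] -= f * aug[c][j]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (aug[i][n] - sum(aug[i][j] * x[j] for j in range(i + 1, n))) / aug[i][i]
    return x

class GPEmulator:
    """Gaussian-process regression (constant mean) trained on (parameter, output) pairs."""

    def __init__(self, params, outputs, noise=1e-8):
        self.params = list(params)
        self.mean = sum(outputs) / len(outputs)
        K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(self.params)]
             for i, a in enumerate(self.params)]
        # K^{-1} (y - mean) is computed once, at training time
        self.alpha = solve(K, [y - self.mean for y in outputs])

    def predict(self, p):
        """Posterior mean at a new parameter value: a few kernel evaluations."""
        return self.mean + sum(rbf(p, q) * a for q, a in zip(self.params, self.alpha))

def run_esm(cloud_param):
    # Hypothetical stand-in for an expensive ESM diagnostic (e.g. a warming metric)
    return 3.0 + 0.8 * math.sin(3.0 * cloud_param)

ensemble = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]  # the "expensive" training runs
emulator = GPEmulator(ensemble, [run_esm(p) for p in ensemble])
```

Each `predict` call costs a handful of kernel evaluations instead of a full simulation. A practical emulator would handle many parameters and outputs, fit its hyperparameters to the ensemble, and use the predictive variance as well as the mean.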

-

- Date & Time: Tuesday, December 7, 2021; 1:00 PM EST

Speaker: Prof. Eric Severson, University of Wisconsin-Madison

MERL Host: Bingnan Wang

Research Area: Electric Systems

Abstract  Electric motors pump our water, heat and cool our homes and offices, drive critical medical and surgical equipment, and, increasingly, operate our transportation systems. Approximately 99% of the world’s electric energy is produced by a rotating generator and 45% of that energy is consumed by an electric motor. The efficiency of this technology is vital in enabling our energy sustainability and reducing our carbon footprint. The reliability and lifetime of this technology have severe, and sometimes life-altering, consequences. Today’s motor technology largely relies upon mechanical bearings to support the motor’s shaft. These bearings are the first components to fail, create frictional losses, and rely on lubricants that create contamination challenges and require periodic maintenance. In short, bearings are the Achilles' heel of modern electric motors.

This seminar will explore the use of actively controlled magnetic forces to levitate the motor shaft, eliminating mechanical bearings and the problems associated with them. The working principles of traditional magnetic levitation technology (active magnetic bearings) will be reviewed and used to explain why this technology has not been successfully applied to the most high-impact motor applications. Research into “bearingless” motors offers a new levitation approach by manipulating the inherent magnetic force capability of all electric motors. While traditional motors are carefully designed to prevent shaft forces, the bearingless motor concept controls these forces to make the motor simultaneously function as an active magnetic bearing. The seminar will showcase the potential of bearingless technology to revolutionize motor systems of critical importance for energy and sustainability—from industrial compressors and blowers, such as those found in HVAC systems and wastewater aeration equipment, to power grid flywheel energy storage devices and electric turbochargers in fuel-efficient vehicles.
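
The levitation principle can be illustrated with the textbook linearized model of one radial axis of an active magnetic bearing: the magnetic force is approximated as F = k_x x + k_i i, where the positive position stiffness k_x makes the uncontrolled shaft unstable. The parameter values and the PD control law below are illustrative assumptions; a bearingless motor produces this force through its motor windings, which the sketch does not model.

```python
def simulate_amb(kp, kd, x0=1e-4, m=1.0, k_x=1.0e4, k_i=10.0, dt=1e-4, steps=2000):
    """Simulate one shaft axis held by a linearized magnetic bearing.

    Force model (assumed): F = k_x * x + k_i * i, where k_x > 0 is the
    destabilizing (negative-stiffness) term and i is the control current.
    Control law (assumed): PD feedback i = -(kp * x + kd * v).
    Returns the final displacement in metres.
    """
    x, v = x0, 0.0
    for _ in range(steps):
        i = -(kp * x + kd * v)       # control current from position/velocity feedback
        a = (k_x * x + k_i * i) / m  # magnetic force divided by rotor mass
        v += a * dt                  # semi-implicit Euler: update velocity first
        x += v * dt
    return x

# Uncontrolled: the magnetic pull grows with displacement and the shaft runs away.
open_loop = simulate_amb(kp=0.0, kd=0.0)
# Controlled: k_i * kp = 2e4 N/m overcomes k_x = 1e4 N/m, and kd adds damping.
closed_loop = simulate_amb(kp=2000.0, kd=14.0)
```

Feedback is essential here: with zero gains the displacement grows exponentially, while the PD loop gives an effective stiffness of k_i·kp - k_x = 10^4 N/m and a damping ratio of about 0.7 for these numbers.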

-

- Date & Time: Tuesday, November 16, 2021; 11:00 AM EST

Speaker: Thomas Schön, Uppsala University

Research Areas: Dynamical Systems, Machine Learning

Abstract  While deep learning-based classification is generally addressed using standardized approaches, this is not the case for regression problems. Several different approaches are currently used for regression, and there is still room for innovation. We have developed a general deep regression method with a clear probabilistic interpretation. The basic building block in our construction is an energy-based model of the conditional output density p(y|x), where we use a deep neural network to predict the un-normalized density from input-output pairs (x, y). Such a construction is also commonly referred to as an implicit representation. The resulting learning problem is challenging, and we offer some insights into how to deal with it. We show good performance on several computer vision regression tasks, system identification problems, and 3D object detection using laser data.
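
A minimal sketch of inference with such a model, assuming a one-dimensional output so that the normalizing constant Z(x) = ∫ exp(f(x, y)) dy can be approximated on a grid. The hand-written `energy_score` is a hypothetical stand-in for the trained network f(x, y), and training is not shown.

```python
import math

def energy_score(x, y):
    """Hypothetical stand-in for the network f(x, y) (higher means more likely).

    Deliberately bimodal, with modes at y = 2x and y = -2x, to show that an
    un-normalized score can represent densities a single Gaussian cannot.
    """
    a = math.log(0.7) - (y - 2 * x) ** 2 / 0.02
    b = math.log(0.3) - (y + 2 * x) ** 2 / 0.02
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))  # stable log-sum-exp

def conditional_density(x, y_grid):
    """Approximate p(y|x) = exp(f(x, y)) / Z(x) by normalizing on a uniform grid."""
    dy = y_grid[1] - y_grid[0]
    scores = [energy_score(x, y) for y in y_grid]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    z = sum(weights) * dy  # Riemann-sum estimate of Z(x), up to the factor exp(m)
    return [w / z for w in weights]

def predict(x, y_grid):
    # Point prediction: the grid value with the highest score (approximate mode)
    return max(y_grid, key=lambda y: energy_score(x, y))

y_grid = [-4.0 + 0.01 * k for k in range(801)]
density = conditional_density(1.0, y_grid)
y_hat = predict(1.0, y_grid)
```

Grid normalization only scales to low-dimensional outputs; picking the highest-scoring grid point is one simple way to obtain a point estimate, and gradient-based refinement of y is a possible alternative.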

-

- Date & Time: Tuesday, November 9, 2021; 1:00 PM EST

Speaker: Prof. Marco Di Renzo, CNRS & Paris-Saclay University

Research Areas: Communications, Electronic and Photonic Devices, Signal Processing

Abstract  A Reconfigurable Intelligent Surface (RIS) is a planar structure engineered to have properties that enable dynamic control of electromagnetic waves. In wireless communications and networks, RISs are an emerging technology for realizing programmable and reconfigurable wireless propagation environments through nearly passive and tunable signal transformations. RIS-assisted programmable wireless environments are a multidisciplinary research endeavor. This presentation aims to report the latest research advances in modeling, analyzing, and optimizing RISs for wireless communications, with a focus on electromagnetically consistent models, analytical frameworks, and optimization algorithms.
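
As a toy illustration of a "programmable" propagation environment, the sketch below uses the simple cascaded-channel model, in which each RIS element applies an independently tunable, unit-modulus phase shift, and aligns every reflected path with the direct path. The channel statistics and element count are assumptions, and the model ignores mutual coupling, angle-dependent element response, and phase quantization, which an electromagnetically consistent model would need to capture.

```python
import cmath
import random

def received_amplitude(h_d, h, g, thetas):
    """|h_d + sum_n h_n * exp(j*theta_n) * g_n| for an idealized, lossless RIS."""
    total = h_d + sum(hn * cmath.exp(1j * t) * gn for hn, gn, t in zip(h, g, thetas))
    return abs(total)

def aligned_phases(h_d, h, g):
    # Rotate every reflected path so it arrives in phase with the direct path
    return [cmath.phase(h_d) - cmath.phase(hn * gn) for hn, gn in zip(h, g)]

def rand_c():
    # Circularly symmetric complex Gaussian sample (assumed channel statistics)
    return complex(random.gauss(0, 1), random.gauss(0, 1))

random.seed(1)
N = 64                                # number of RIS elements (illustrative)
h_d = 0.1 * rand_c()                  # weak direct link (assumed)
h = [rand_c() for _ in range(N)]      # transmitter -> RIS element n
g = [rand_c() for _ in range(N)]      # RIS element n -> receiver

best = received_amplitude(h_d, h, g, aligned_phases(h_d, h, g))
random_phase = received_amplitude(
    h_d, h, g, [random.uniform(0, 2 * cmath.pi) for _ in range(N)])
```

With aligned phases the amplitude equals |h_d| + Σ|h_n g_n|, so it grows linearly with the number of elements, whereas random phases add incoherently.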

-

- Date & Time: Tuesday, November 2, 2021; 1:00 PM EST

Speaker: Dr. Hsiao-Yu (Fish) Tung, MIT BCS

Research Areas: Artificial Intelligence, Computer Vision, Machine Learning, Robotics

Abstract  Current state-of-the-art CNNs can localize and name objects in internet photos, yet they lack basic knowledge that a two-year-old toddler already possesses: objects persist over time despite changes in the observer’s viewpoint or during cross-object occlusions; objects have 3D extent; solid objects do not pass through each other. In this talk, I will introduce neural architectures that learn to parse video streams of a static scene into world-centric 3D feature maps by disentangling camera motion from scene appearance. I will show that the proposed architectures learn object permanence, can imagine RGB views from novel viewpoints in truly novel scenes, can conduct basic spatial reasoning and planning, can infer affordability in sentences, and can learn geometry-aware 3D concepts that allow pose-aware object recognition to happen with weak/sparse labels. Our experiments suggest that the proposed architectures are essential for the models to generalize across objects and locations, and that they overcome many limitations of 2D CNNs. I will show how we can use the proposed 3D representations to build machine perception and physical understanding closer to those of humans.

-

- Date & Time: Tuesday, October 12, 2021; 1:00 PM EST

Speaker: Prof. Greg Ongie, Marquette University

MERL Host: Hassan Mansour

Research Areas: Computational Sensing, Machine Learning, Signal Processing

Abstract  Deep learning is emerging as a powerful tool for solving challenging inverse problems in computational imaging, including basic image restoration tasks like denoising and deblurring, as well as image reconstruction problems in medical imaging. This talk will give an overview of the state-of-the-art supervised learning techniques in this area and discuss two recent innovations: deep equilibrium architectures, which allow one to train an effectively infinite-depth reconstruction network; and model adaptation methods, which allow one to adapt a pre-trained reconstruction network to changes in the imaging forward model at test time.
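
A deep equilibrium network replaces a stack of layers with the fixed point z* = f(z*, y) of a single layer. The sketch below, for a toy 1-D deblurring problem, finds that fixed point by plain iteration; the layer, the blur operator, and the `shrink` factor that stands in for a learned denoiser are all illustrative assumptions, and training (usually done by implicit differentiation through the fixed point) is not shown.

```python
def blur(z):
    """Symmetric 3-tap blur [0.25, 0.5, 0.25] with zero padding (so A^T = A)."""
    n = len(z)
    return [0.25 * (z[i - 1] if i > 0 else 0.0) + 0.5 * z[i]
            + 0.25 * (z[i + 1] if i < n - 1 else 0.0) for i in range(n)]

def layer(z, y, step=1.0, shrink=0.95):
    """One 'layer': a gradient step on ||A z - y||^2 / 2, then a placeholder denoiser.

    Scaling by `shrink` is a hypothetical stand-in for a learned denoising
    network; it makes the map a contraction, so the fixed point is unique.
    """
    residual = [a - b for a, b in zip(blur(z), y)]
    grad = blur(residual)  # A^T (A z - y), since A is symmetric
    return [shrink * (zi - step * gi) for zi, gi in zip(z, grad)]

def deq_forward(y, tol=1e-10, max_iter=10_000):
    """Find z* = layer(z*, y) by fixed-point iteration: an 'infinitely deep' network."""
    z = [0.0] * len(y)
    for k in range(max_iter):
        z_next = layer(z, y)
        if max(abs(a - b) for a, b in zip(z_next, z)) < tol:
            return z_next, k + 1
        z = z_next
    raise RuntimeError("fixed-point iteration did not converge")

measurements = blur([0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0])
z_star, iterations = deq_forward(measurements)
```

Because the layer is a contraction here, the iteration converges to a unique z* without fixing the number of unrolled layers in advance. The placeholder shrinkage biases z* away from the exact deblurred signal; a trained denoiser would play that role properly.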

-

- Date & Time: Tuesday, September 28, 2021; 1:00 PM EST

Speaker: Dr. Ruohan Gao, Stanford University

MERL Host: Gordon Wichern

Research Areas: Computer Vision, Machine Learning, Speech & Audio

Abstract  While computer vision has made significant progress by "looking" (detecting objects, actions, or people based on their appearance), it often does not listen. Yet cognitive science tells us that perception develops by making use of all our senses without intensive supervision. Towards this goal, in this talk I will present my research on audio-visual learning: we disentangle object sounds from unlabeled video, use audio as an efficient preview for action recognition in untrimmed video, decode the monaural soundtrack into its binaural counterpart by injecting visual spatial information, and use echoes to interact with the environment for spatial image representation learning. Together, these are steps towards multimodal understanding of the visual world, where audio serves as both a semantic and a spatial signal. Finally, I will briefly talk about our latest work on multisensory learning for robotics.

-

- Date & Time: Tuesday, September 14, 2021; 1:00 PM EST

Speaker: Prof. David Bergman, University of Connecticut

MERL Host: Arvind Raghunathan

Research Areas: Data Analytics, Machine Learning, Optimization

Abstract  The integration of machine learning and optimization opens the door to new modeling paradigms that have already proven successful across a broad range of industries. Sports betting is a particularly exciting application area, where recent advances in both analytics and optimization can provide a lucrative edge. In this talk we will discuss three algorithmic sports betting games where combinations of machine learning and optimization have netted me significant winnings.

-

- Date & Time: Tuesday, February 16, 2021; 11:00-12:00

Speaker: Prof. Pere Gilabert, Universitat Politecnica de Catalunya, Barcelona, Spain

Research Areas: Communications, Electronic and Photonic Devices, Machine Learning, Signal Processing

Abstract - Digital predistortion (DPD) linearization is the most common and widespread solution for coping with the inherent linearity-versus-efficiency trade-off of power amplifiers (PAs). The use of spectrally efficient 5G New Radio (NR) signals, with high peak-to-average power ratios (PAPR) and wider bandwidths, only aggravates this trade-off. When considering wide-bandwidth signals, carrier aggregation, or multi-band configurations in highly efficient transmitter architectures (such as Doherty PAs, load-modulated balanced amplifiers, envelope tracking PAs, or outphasing transmitters), the number of parameters required in the DPD model to compensate for both nonlinearities and memory effects can be unacceptably high. This has a negative impact on DPD model extraction and adaptation, because it increases the computational complexity and leads to over-fitting and uncertainty.

This talk will discuss the use of machine learning techniques for DPD linearization. The use of artificial neural networks (ANNs) for adaptive DPD linearization, and approaches to reduce the coefficient adaptation time, will be discussed. In addition, an overview of several feature-extraction techniques will be presented; these techniques are used to reduce the number of parameters of the DPD linearization system and to ensure proper, well-conditioned estimation of the related variables.
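
To make the parameter-count issue concrete, the sketch below uses the memory polynomial, a classic compact PA/DPD behavioral model (not the ANN-based approach of the talk). It builds the K·M regressors and fits complex coefficients by least squares via the normal equations; the coefficient values and signal statistics are made up for a noise-free self-check.

```python
import random

def mp_regressors(x, K, M):
    """Memory-polynomial regressors x[n-m] * |x[n-m]|**(k-1), k = 1..K, m = 0..M-1.

    The model has K*M complex coefficients, so the parameter count grows
    quickly as the nonlinear order K and memory depth M increase.
    """
    rows = []
    for n in range(len(x)):
        row = []
        for m in range(M):
            xm = x[n - m] if n >= m else 0j  # zero initial conditions
            row.extend(xm * abs(xm) ** (k - 1) for k in range(1, K + 1))
        rows.append(row)
    return rows

def solve(A, b):
    """Solve the complex system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    aug = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(c + 1, n):
            f = aug[r][c] / aug[c][c]
            for j in range(c, n + 1):
                aug[r][j] -= f * aug[c][j]
    coeffs = [0j] * n
    for i in range(n - 1, -1, -1):
        s = sum(aug[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (aug[i][n] - s) / aug[i][i]
    return coeffs

def fit_memory_polynomial(x, y, K, M):
    """Least-squares coefficients from the normal equations (Phi^H Phi) a = Phi^H y."""
    Phi = mp_regressors(x, K, M)
    P = K * M
    G = [[sum(r[i].conjugate() * r[j] for r in Phi) for j in range(P)] for i in range(P)]
    h = [sum(r[i].conjugate() * yn for r, yn in zip(Phi, y)) for i in range(P)]
    return solve(G, h)

# Synthetic check: identify a known (made-up) PA model from noise-free data.
random.seed(0)
x = [complex(random.gauss(0, 0.5), random.gauss(0, 0.5)) for _ in range(300)]
true_coeffs = [1.0 + 0.1j, -0.08 + 0.02j, -0.05j, 0.1 - 0.05j, 0.01j, -0.02 + 0.0j]
y = [sum(a * phi for a, phi in zip(true_coeffs, row)) for row in mp_regressors(x, 3, 2)]
estimated = fit_memory_polynomial(x, y, K=3, M=2)
```

In a DPD setting, the same regression is commonly run in an indirect-learning arrangement, fitting a post-inverse from the PA output back to its input and copying it in front of the PA. Raising K or M enlarges the regression and tends to worsen its conditioning, which is the kind of over-fitting and estimation uncertainty that feature-extraction techniques aim to reduce.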

-

- Date & Time: Tuesday, August 25, 2020; 11:00 AM

Speaker: Prof. James Hwang, Cornell University

Research Areas: Applied Physics, Electronic and Photonic Devices

Abstract  Microwave is not just for cooking, smart cars, or mobile phones. We can take advantage of the wide electromagnetic spectrum to do wonderful things that are more vital to our lives. For example, microwave ablation of cancer tumors is already in wide use, and microwave remote monitoring of vital signs is becoming more important as the population ages. This talk will focus on biomedical uses of microwave at the single-cell level. At low power, microwave can readily penetrate a cell membrane to interrogate what is inside a cell without cooking it or otherwise hurting it. It is currently the fastest, most compact, and least costly way to tell whether a cell is alive or dead. On the other hand, at higher power but lower frequency, the electromagnetic signal can interact strongly with the cell membrane to drill temporary nanometer-sized holes. These nanopores allow drugs to diffuse into the cell and, based on the reaction of the cell, individualized medicine can be developed and drug development can be sped up in general. Conversely, the nanopores allow strands of DNA molecules to be pulled out of the cell without killing it, which can speed up genetic engineering. Lastly, by changing both the power and frequency of the signal, we can have either positive or negative dielectrophoresis effects, which we have used to coerce a live cell to the examination table of Dr. Microwave, then usher it out after examination. These interesting uses of microwave and the resulting fundamental knowledge about biological cells will be explored in the talk.

-

- Date & Time: Tuesday, July 14, 2020; 11:00 AM

Speaker: Hanrui Wang, MIT

Research Areas: Electronic and Photonic Devices, Machine Learning

Abstract - Automatic transistor sizing is a challenging problem in circuit design due to the large design space, complex performance trade-offs, and fast technological advancements. Although there has been plenty of work on transistor sizing targeting a single circuit, limited research has been done on transferring knowledge from one circuit to another to reduce the re-design overhead. In this work, we present GCN-RL Circuit Designer, which leverages reinforcement learning (RL) to transfer knowledge between different technology nodes and topologies. Moreover, inspired by the simple fact that a circuit is a graph, we learn on the circuit topology representation with graph convolutional neural networks (GCN). The GCN-RL agent extracts features of the topology graph, whose vertices are transistors and whose edges are wires. Our learning-based optimization consistently achieves the highest Figures of Merit (FoM) on four different circuits compared with conventional black-box optimization methods (Bayesian Optimization, Evolutionary Algorithms), random search, and human expert designs. Experiments on transfer learning between five technology nodes and two circuit topologies demonstrate that RL with transfer learning can achieve much higher FoMs than methods without knowledge transfer. Our transferable optimization method makes transistor sizing and design porting more effective and efficient. The work has been accepted to DAC 2020.
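
The idea that a circuit is a graph can be made concrete with the standard graph-convolution propagation rule H' = ReLU(Â H W), where Â = D^{-1/2}(A + I)D^{-1/2} (the Kipf and Welling formulation). The circuit, the per-transistor features, and the weights below are hypothetical; GCN-RL's actual node features, layer count, and coupling to the RL agent are not described in the abstract.

```python
import math

def normalized_adjacency(n, edges):
    """Â = D^{-1/2} (A + I) D^{-1/2} for an undirected graph with n vertices."""
    A = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]  # self-loops
    for u, v in edges:
        A[u][v] = A[v][u] = 1.0
    deg = [sum(row) for row in A]
    return [[A[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def gcn_layer(A_hat, H, W):
    """One propagation step H' = ReLU(Â H W): each vertex mixes its neighbors' features."""
    AH = [[sum(A_hat[i][k] * H[k][f] for k in range(len(H))) for f in range(len(H[0]))]
          for i in range(len(H))]
    return [[max(0.0, sum(row[f] * W[f][o] for f in range(len(W))))
             for o in range(len(W[0]))] for row in AH]

# Hypothetical 4-transistor circuit: vertices are transistors, and an edge
# joins two transistors that share a wire (net).
edges = [(0, 1), (1, 2), (1, 3)]
A_hat = normalized_adjacency(4, edges)
# Hypothetical per-transistor features, e.g. [is_nmos, width_um, length_um].
H = [[1.0, 2.0, 0.18], [0.0, 4.0, 0.18], [1.0, 1.0, 0.36], [0.0, 3.0, 0.18]]
# Hypothetical weights; in practice they would be learned during training.
W = [[0.5, -0.2], [0.1, 0.3], [1.0, 0.0]]
embeddings = gcn_layer(A_hat, H, W)
```

After one layer, each transistor's embedding mixes its own features with those of transistors it shares a wire with; stacking layers widens that neighborhood. An RL policy could then map these embeddings to sizing actions.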

-

- Date & Time: Thursday, May 7, 2020; 11:00 AM

Speaker: Prof. Petar Popovski, Aalborg University, Denmark

MERL Host: Toshiaki Koike-Akino

Research Areas: Artificial Intelligence, Communications, Machine Learning, Signal Processing, Information Security

Abstract  The wireless landscape is evolving towards supporting a large population of connections for humans and machines with very diverse features and requirements. Perhaps the main motivation for 5G wireless systems is the flexibility to support heterogeneous connectivity requirements: enhanced mobile broadband (eMBB), massive machine-type communications (mMTC), and ultra-reliable low-latency communications (URLLC). However, this classification is rather limited and is currently being revised within the research community. The first part of this talk will discuss how this heterogeneity can be revised and which opportunities it opens with respect to spectrum usage. The second part will deal with performance guarantees of wireless services, specifically ultra-reliable communication, and outline the importance of machine learning in that context. The final part will provide a broader view of the evolution of wireless connectivity, including aspects implied by the resistance to the deployment of 5G, as well as new opportunities that can transform the way we build and use connected systems.

-

- Date & Time: Thursday, May 7, 2020; 12:00 PM

Speaker: Christopher Rackauckas, MIT

MERL Host: Christopher R. Laughman

Research Areas: Machine Learning, Multi-Physical Modeling, Optimization

Abstract - In the context of science, the well-known adage "a picture is worth a thousand words" might well be "a model is worth a thousand datasets." Scientific models, such as Newtonian physics or biological gene regulatory networks, are human-driven simplifications of complex phenomena that serve as surrogates for the countless experiments that validated the models. Recently, machine learning has been able to overcome the inaccuracies of approximate modeling by directly learning the entire set of nonlinear interactions from data. However, without any predetermined structure from the scientific basis behind the problem, machine learning approaches are flexible but data-expensive, requiring large databases of homogeneous labeled training data. A central challenge is reconciling data that is at odds with simplified models without requiring "big data". In this talk we discuss a new methodology, universal differential equations (UDEs), which augment scientific models with machine-learnable structures for scientifically-based learning. We show how UDEs can be utilized to discover previously unknown governing equations, accurately extrapolate beyond the original data, and accelerate model simulation, all in a time and data-efficient manner. This advance is coupled with open-source software that allows for training UDEs which incorporate physical constraints, delayed interactions, implicitly-defined events, and intrinsic stochasticity in the model. Our examples show how a diverse set of computationally-difficult modeling issues across scientific disciplines, from automatically discovering biological mechanisms to accelerating climate simulations by 15,000x, can be handled by training UDEs.
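
A UDE keeps the known part of a model and adds a learnable term. The sketch below uses a Lotka-Volterra-style predator-prey system with known growth and decay terms and a tiny neural network for the unknown interactions; the parameter values, network size, and weights are hypothetical, and training (fitting the network weights to data, for example by differentiating through the solver) is omitted.

```python
import math

def mlp(params, x, y):
    """Tiny one-hidden-layer network U(x, y) -> (u1, u2); params are hypothetical."""
    W1, b1, W2, b2 = params
    hidden = [math.tanh(w[0] * x + w[1] * y + b) for w, b in zip(W1, b1)]
    return tuple(sum(w * h for w, h in zip(row, hidden)) + b for row, b in zip(W2, b2))

def ude_rhs(state, params, alpha=1.3, delta=1.8):
    """Known physics (prey growth, predator decay) plus a learnable interaction term."""
    x, y = state
    u1, u2 = mlp(params, x, y)
    return (alpha * x + u1, -delta * y + u2)

def rk4(rhs, state, params, dt, steps):
    """Classic fourth-order Runge-Kutta integration of d(state)/dt = rhs(state)."""
    for _ in range(steps):
        k1 = rhs(state, params)
        k2 = rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)), params)
        k3 = rhs(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)), params)
        k4 = rhs(tuple(s + dt * k for s, k in zip(state, k3)), params)
        state = tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                      for s, a, b, c, d in zip(state, k1, k2, k3, k4))
    return state

# With the output layer zeroed the network contributes nothing, so the UDE reduces
# to the known-physics model, whose solution is x0*e^{alpha t}, y0*e^{-delta t}.
hidden = 8
zero_params = ([[0.1, -0.2]] * hidden, [0.0] * hidden, [[0.0] * hidden] * 2, [0.0, 0.0])
x_T, y_T = rk4(ude_rhs, (1.0, 0.5), zero_params, dt=0.01, steps=100)
```

Zeroing the output layer recovers the purely mechanistic model, which doubles as a check on the integrator. Training would adjust `params` until trajectories match observations; inspecting the learned term afterwards is one route to the equation discovery mentioned above.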

-

- Date & Time: Tuesday, July 16, 2019; 12:00 PM

Speaker: Prof. Jeff Linderoth, University of Wisconsin-Madison

MERL Host: Arvind Raghunathan

Research Areas: Machine Learning, Optimization

Abstract  Algorithms to solve mixed integer linear programs have made incredible progress in the past 20 years. Key to these advances has been a mathematical analysis of the structure of the set of feasible solutions. We argue that a similar analysis is required in the case of mixed integer quadratic programs, like those that arise in sparse optimization in machine learning. One such analysis leads to the so-called perspective relaxation, which significantly improves solution performance on separable instances. Extensions of the perspective reformulation can lead to algorithms that are equivalent to some of the most popular, modern, sparsity-inducing non-convex regularizations in variable selection. Based on joint work with Hongbo Dong (Washington State Univ.), Oktay Gunluk (IBM), and Kun Chen (Univ. Connecticut).
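
For a separable quadratic term with an on/off indicator, the perspective reformulation can be written as follows (a standard form, with notation chosen here for illustration): q_i > 0, c_i, ℓ_i, and u_i are data, x_i is continuous, and z_i indicates whether x_i may be nonzero.

$$
\min_{x,z} \sum_i \left( q_i x_i^2 + c_i z_i \right)
\quad \text{s.t.} \quad \ell_i z_i \le x_i \le u_i z_i,\; z_i \in \{0,1\}
$$

$$
\min_{x,z,t} \sum_i \left( q_i t_i + c_i z_i \right)
\quad \text{s.t.} \quad x_i^2 \le t_i z_i,\; t_i \ge 0,\; \ell_i z_i \le x_i \le u_i z_i,\; z_i \in \{0,1\}
$$

When z_i = 1 the new constraint reduces to t_i ≥ x_i^2, and when z_i = 0 the bounds force x_i = 0 so t_i = 0 is feasible; the two models therefore agree at integer points. After relaxing z_i to [0, 1], however, fractional z_i gives t_i ≥ x_i^2 / z_i ≥ x_i^2, so the continuous relaxation is at least as tight as the naive one. Because x_i^2 ≤ t_i z_i is a rotated second-order cone constraint, the relaxation remains convex.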

-

- Date & Time: Thursday, February 14, 2019; 1:30-3:00 PM

Speaker: Avishai Weiss, MERL

MERL Hosts: Stefano Di Cairano; Avishai Weiss

Research Area: Control

Abstract - Avishai Weiss from MERL's Control and Dynamical Systems group will give a talk at Stanford's Aeronautics and Astronautics department titled "Low-Thrust GEO Satellite Station Keeping, Attitude Control, and Momentum Management via Model Predictive Control". Electric propulsion for satellites is much more fuel-efficient than conventional methods. The talk will describe MERL's solution to the satellite control problems arising from the low thrust provided by electric propulsion.

-

- Date & Time: Tuesday, March 6, 2018; 12:00 PM

Speaker: Scott Wisdom, Affectiva

MERL Host: Jonathan Le Roux

Research Area: Speech & Audio

Abstract  Recurrent neural networks (RNNs) are effective, data-driven models for sequential data, such as audio and speech signals. However, like many deep networks, RNNs are essentially black boxes; though they are effective, their weights and architecture are not directly interpretable by practitioners. A major component of my dissertation research is explaining the success of RNNs and constructing new RNN architectures through the process of "deep unfolding," which can construct and explain deep network architectures using an equivalence to inference in statistical models. Deep unfolding yields principled initializations for training deep networks, provides insight into their effectiveness, and assists with interpretation of what these networks learn.

In particular, I will show how RNNs with rectified linear units and residual connections are a particular deep unfolding of a sequential version of the iterative shrinkage-thresholding algorithm (ISTA), a simple and classic algorithm for solving L1-regularized least-squares problems. This equivalence allows interpretation of state-of-the-art unitary RNNs (uRNNs) as an unfolded sparse coding algorithm. I will also describe a new type of RNN architecture called deep recurrent nonnegative matrix factorization (DR-NMF). DR-NMF is an unfolding of a sparse NMF model of nonnegative spectrograms for audio source separation. Both of these networks outperform conventional LSTM networks while also providing interpretability for practitioners.
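
The ISTA iteration referred to here can be sketched in a few lines, assuming a dense matrix A stored as nested lists and a step size 1/L with L at least the largest eigenvalue of A^T A. The sketch shows the plain (non-sequential) algorithm that deep unfolding starts from, not the sequential variant or the uRNN and DR-NMF architectures from the talk.

```python
def soft_threshold(v, tau):
    """Proximal operator of tau*|v|: shrink v towards zero by tau."""
    return max(v - tau, 0.0) - max(-v - tau, 0.0)

def ista(A, y, lam, L, iters=200):
    """ISTA for min_x 0.5*||A x - y||^2 + lam*||x||_1, with step 1/L, L >= ||A||_2^2.

    Each iteration is an affine map followed by a pointwise nonlinearity:
        x <- soft(x + A^T (y - A x) / L, lam / L)
    Unrolling a fixed number of iterations therefore gives a feed-forward
    network with tied weights, the starting point of deep unfolding.
    """
    rows, cols = len(A), len(A[0])
    x = [0.0] * cols
    for _ in range(iters):
        r = [yi - sum(A[i][j] * x[j] for j in range(cols)) for i, yi in enumerate(y)]
        g = [sum(A[i][j] * r[i] for i in range(rows)) for j in range(cols)]  # A^T r
        x = [soft_threshold(xj + gj / L, lam / L) for xj, gj in zip(x, g)]
    return x
```

Writing the update as x <- soft(S x + W y, lam/L) with S = I - A^T A / L and W = A^T / L makes the layer structure explicit; for nonnegative iterates the soft threshold reduces to ReLU(v - lam/L), which is the link to rectified linear units.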

-

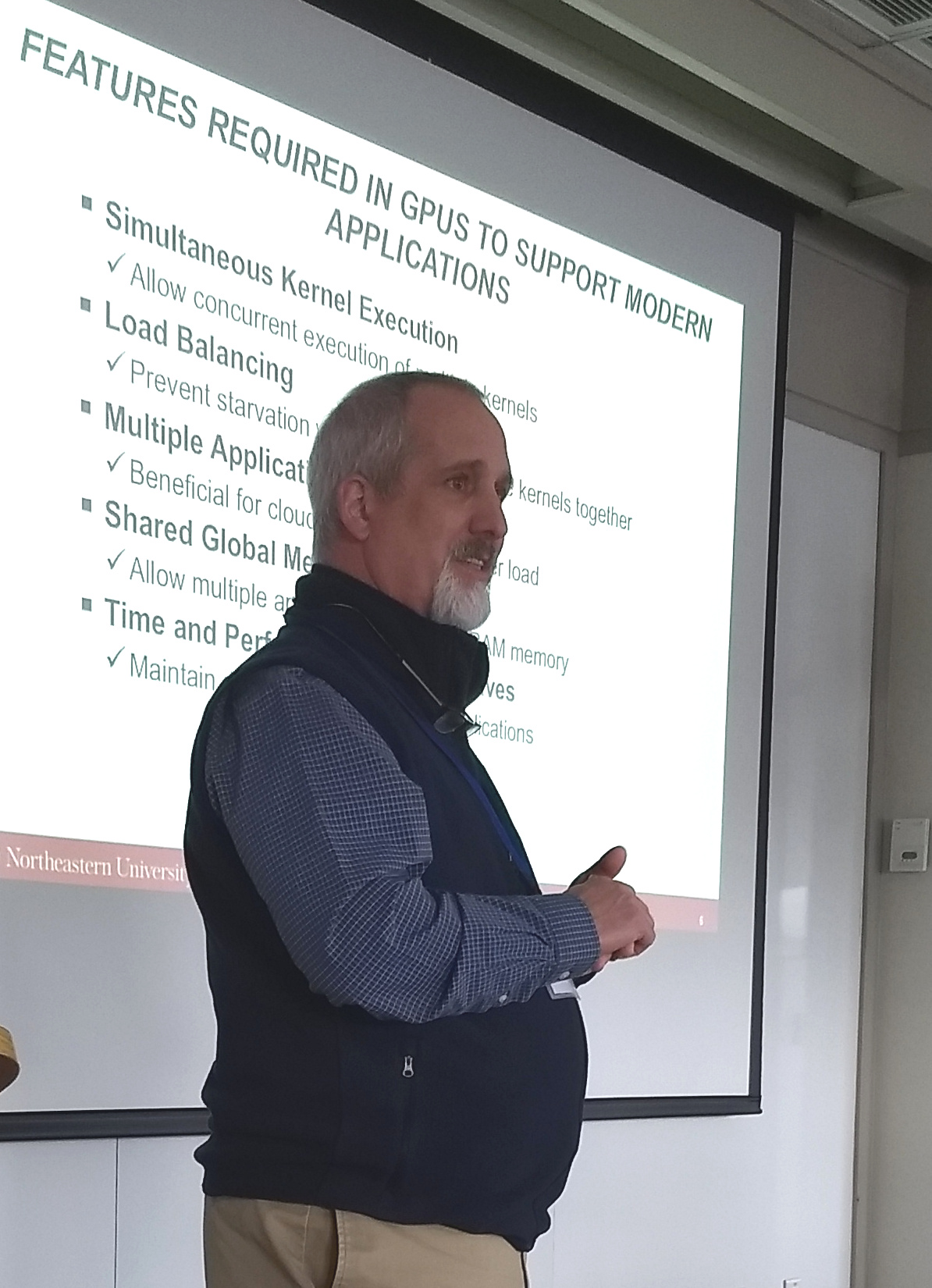

- Date & Time: Friday, February 2, 2018; 12:00

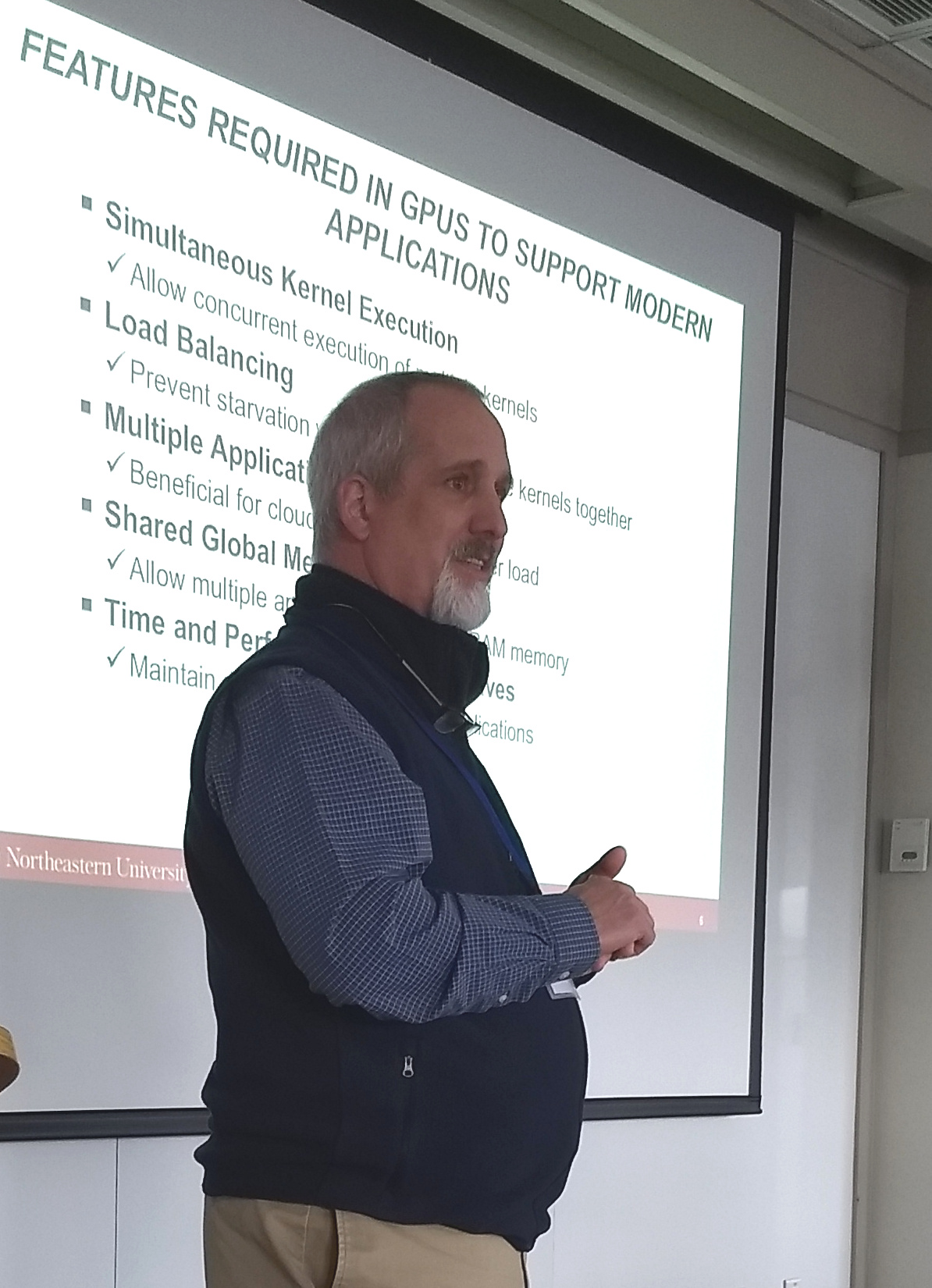

Speaker: Dr. David Kaeli, Northeastern University

MERL Host: Abraham Goldsmith

Research Areas: Control, Optimization, Machine Learning, Speech & Audio

Abstract  GPU computing is alive and well! The GPU has allowed researchers to overcome a number of computational barriers in important problem domains. Still, challenges remain in using GPUs to target more general-purpose applications. GPUs achieve impressive speedups over CPUs because they have a large number of compute cores and high memory bandwidth. Recent GPUs are approaching 10 teraflops of single-precision performance on a single device. In this talk we will discuss current trends in GPUs, including some advanced features that allow them to exploit multi-context grains of parallelism. Further, we consider how GPUs can be treated as cloud-based resources, enabling a GPU-enabled server to deliver HPC cloud services by leveraging virtualization and collaborative filtering. Finally, we argue for new heterogeneous workloads and discuss the role of the Heterogeneous System Architecture (HSA), a standard that further supports integration of the CPU and GPU into a common framework. We present a new class of benchmarks specifically tailored to evaluate the benefits of features supported in the new HSA programming model.

-

- Date & Time: Wednesday, February 1, 2017; 12:00-13:00

Speaker: Dr. Heiga ZEN, Google

MERL Host: Chiori Hori

Research Area: Speech & Audio

Abstract  Recent progress in generative modeling has improved the naturalness of synthesized speech significantly. In this talk I will summarize these generative model-based approaches for speech synthesis such as WaveNet, a deep generative model of raw audio waveforms. We show that WaveNets are able to generate speech which mimics any human voice and which sounds more natural than the best existing Text-to-Speech systems.

See https://deepmind.com/blog/wavenet-generative-model-raw-audio/ for further details.
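The WaveNet paper builds its model of raw audio from stacks of dilated causal convolutions, with dilations doubling from layer to layer so that the receptive field grows exponentially with depth. The sketch below shows only that building block in NumPy: a causal dilated convolution and the receptive-field arithmetic. The gated activations, residual and skip connections, and the output distribution over quantized samples of the real model are omitted, and the function names are hypothetical.

```python
import numpy as np

def causal_dilated_conv(x, w, dilation):
    """y[t] = sum_i w[i] * x[t - i*dilation]; samples before t = 0 are treated as zero."""
    x = np.asarray(x, dtype=float)
    pad = (len(w) - 1) * dilation
    xp = np.concatenate([np.zeros(pad), x])   # left padding only, so no future samples are used
    y = np.zeros(len(x))
    for i, wi in enumerate(w):
        start = pad - i * dilation            # tap i looks back i*dilation samples
        y += wi * xp[start:start + len(x)]
    return y

def dilated_stack(x, w, dilations):
    """Apply one causal dilated convolution per layer (linear, for illustration only)."""
    for d in dilations:
        x = causal_dilated_conv(x, w, d)
    return x

def receptive_field(kernel_size, dilations):
    """Number of input samples (including the current one) that can influence one output."""
    return (kernel_size - 1) * sum(dilations) + 1

# Usage: an impulse at t = 0 reaches exactly receptive_field(2, [1, 2, 4, 8]) = 16 outputs
impulse = np.zeros(32)
impulse[0] = 1.0
response = dilated_stack(impulse, [1.0, 1.0], [1, 2, 4, 8])
```

With a kernel of size 2 and dilations 1, 2, 4, ..., 512, one stack already covers 1024 samples while using only ten layers.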

-

- Date & Time: Tuesday, December 13, 2016; Noon

Speaker: Yue M. Lu, John A. Paulson School of Engineering and Applied Sciences, Harvard University

MERL Host: Petros T. Boufounos

Research Areas: Computational Sensing, Machine Learning

Abstract  In this talk, we will present a framework for analyzing, in the high-dimensional limit, the exact dynamics of several stochastic optimization algorithms that arise in signal and information processing. For concreteness, we consider two prototypical problems: sparse principal component analysis and regularized linear regression (e.g. LASSO). For each case, we show that the time-varying estimates given by the algorithms will converge weakly to a deterministic "limiting process" in the high-dimensional limit. Moreover, this limiting process can be characterized as the unique solution of a nonlinear PDE, and it provides exact information regarding the asymptotic performance of the algorithms. For example, performance metrics such as the MSE, the cosine similarity and the misclassification rate in sparse support recovery can all be obtained by examining the deterministic limiting process. A steady-state analysis of the nonlinear PDE also reveals interesting phase transition phenomena related to the performance of the algorithms. Although our analysis is asymptotic in nature, numerical simulations show that the theoretical predictions are accurate for moderate signal dimensions.
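As a concrete instance of the kind of streaming algorithm analyzed above, here is a minimal sketch of online (stochastic proximal gradient) LASSO: each incoming sample (a_t, y_t) triggers one gradient step on 0.5*(a_t^T x - y_t)^2 followed by soft-thresholding. The step size, penalty, and synthetic data are illustrative assumptions, and this is not claimed to be the exact algorithm studied in the talk. The MSE computed at the end is the kind of performance metric that, according to the abstract, can be read off the deterministic limiting process.

```python
import numpy as np

def soft_threshold(u, tau):
    return np.sign(u) * np.maximum(np.abs(u) - tau, 0.0)

def online_lasso(stream, n, step, lam):
    """One pass of stochastic proximal gradient over a stream of (a_t, y_t) pairs."""
    x = np.zeros(n)
    for a, y in stream:
        grad = a * (a @ x - y)                            # gradient of 0.5*(a^T x - y)^2
        x = soft_threshold(x - step * grad, step * lam)   # prox step for lam*||x||_1
    return x

# Synthetic high-dimensional example (all sizes and constants are illustrative)
rng = np.random.default_rng(1)
n = 100
x_true = np.zeros(n)
x_true[:5] = [1.0, -1.0, 1.0, -1.0, 1.0]

def sample_stream(T, noise=0.01):
    for _ in range(T):
        a = rng.standard_normal(n)
        yield a, a @ x_true + noise * rng.standard_normal()

x_hat = online_lasso(sample_stream(20 * n), n, step=0.5 / n, lam=0.01)
mse = np.mean((x_hat - x_true) ** 2)
```

Scaling the step size as 1/n and the number of samples as a multiple of n mirrors the high-dimensional regime in which the limiting process is defined.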

-

- Date & Time: Monday, December 12, 2016; 12:00 PM

Speaker: Yanlai Chen, Department of Mathematics at the University of Massachusetts Dartmouth

Research Areas: Control, Dynamical Systems

Abstract - Models of reduced computational complexity are indispensable in scenarios where a large number of numerical solutions to a parametrized problem are needed quickly or in real time. These include simulation-based design, parameter optimization, optimal control, multi-model/multi-scale analysis, and uncertainty quantification. Thanks to an offline-online procedure and the recognition that parameter-induced solution manifolds can be well approximated by finite-dimensional spaces, the reduced basis method (RBM) and the reduced collocation method (RCM) can improve efficiency by several orders of magnitude. The accuracy of the RBM solution is maintained through a rigorous a posteriori error estimator, whose efficient development is critical and involves fast eigensolves.

In this talk, I will give a brief introduction to the RBM/RCM and explain how they can be used for data compression, face recognition, and significantly delaying the curse of dimensionality in uncertainty quantification.
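The offline-online split can be sketched on a toy affine problem A(mu) = A0 + mu*A1. This is an assumption-laden stand-in: it builds the reduced space by proper orthogonal decomposition (an SVD of snapshots) rather than the greedy, error-estimator-driven selection that the reduced basis method normally uses, and it omits the a posteriori error estimator entirely. What it does show is that, after an expensive offline stage, each new parameter value costs only an r x r solve.

```python
import numpy as np

N = 200                      # full-order dimension of the toy problem
# Hypothetical affine parametrization A(mu) = A0 + mu * A1:
# A0 is a 1D discrete Laplacian, A1 an identity "reaction" term.
A0 = 2.0 * np.eye(N) - np.eye(N, k=1) - np.eye(N, k=-1)
A1 = np.eye(N)
b = np.ones(N)

def full_solve(mu):
    """Expensive high-fidelity solve (dense, O(N^3))."""
    return np.linalg.solve(A0 + mu * A1, b)

# Offline stage (done once): snapshots at training parameters, compressed by SVD (POD).
train_mus = np.logspace(0.0, 1.0, 20)            # mu in [1, 10]
snapshots = np.column_stack([full_solve(mu) for mu in train_mus])
U, sing_vals, _ = np.linalg.svd(snapshots, full_matrices=False)
r = 10
V = U[:, :r]                                     # reduced basis with orthonormal columns
A0_r, A1_r, b_r = V.T @ A0 @ V, V.T @ A1 @ V, V.T @ b   # projected affine pieces, stored

# Online stage (per query): an r x r Galerkin system whose cost does not depend on N.
def reduced_solve(mu):
    c = np.linalg.solve(A0_r + mu * A1_r, b_r)
    return V @ c

mu_test = 3.3                                    # a parameter value not in the training set
u_full = full_solve(mu_test)
rel_err = np.linalg.norm(reduced_solve(mu_test) - u_full) / np.linalg.norm(u_full)
```

The affine structure is what makes the online stage independent of N: the projected pieces are computed once and only recombined with the new mu.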

-

- Date & Time: Friday, December 2, 2016; 11:00 AM

Speaker: Prof. Waheed Bajwa, Rutgers University

MERL Host: Petros T. Boufounos

Research Area: Computational Sensing

Abstract  While distributed information processing has a rich history, relatively little attention has been paid to the problem of collaborative learning of nonlinear geometric structures underlying data distributed across sites that are connected to each other in an arbitrary topology. In this talk, we discuss this problem in the context of collaborative dictionary learning from big, distributed data. It is assumed that a number of geographically distributed, interconnected sites have massive local data and are interested in collaboratively learning a low-dimensional geometric structure underlying these data. In contrast to some previous work on subspace-based data representations, we focus on the geometric structure of a union of subspaces (UoS). To this end, we propose a distributed algorithm, termed cloud K-SVD, for collaborative learning of a UoS structure underlying distributed data of interest. The goal of cloud K-SVD is to learn an overcomplete dictionary at each individual site such that every sample in the distributed data can be represented through a small number of atoms of the learned dictionary. Cloud K-SVD accomplishes this goal without requiring communication of individual data samples between different sites. In this talk, we also theoretically characterize deviations of the dictionaries learned at individual sites by cloud K-SVD from a centralized solution. Finally, we numerically illustrate the efficacy of cloud K-SVD in the context of supervised training of nonlinear classifiers from distributed, labeled training data.
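The key communication pattern can be sketched as follows, under stated assumptions. In centralized K-SVD, each dictionary atom is updated from the dominant singular vector of a residual matrix. In a distributed setting, a power method can compute the dominant eigenvector of the sum of local matrices M_i = E_i E_i^T, with the sum replaced by consensus averaging among neighboring sites, so raw samples never leave their site. Cloud K-SVD's atom update is, roughly, a consensus-based distributed power method of this kind; the ring topology, weights, iteration counts, and synthetic data below are hypothetical, and the sparse-coding step and full atom update are omitted.

```python
import numpy as np

def ring_weights(n_sites):
    """Doubly stochastic averaging matrix for a ring (assumes n_sites >= 3):
    each site mixes itself and its two neighbors with weight 1/3 each."""
    W = np.zeros((n_sites, n_sites))
    for i in range(n_sites):
        for j in (i - 1, i, i + 1):
            W[i, j % n_sites] = 1.0 / 3.0
    return W

def distributed_power_method(local_mats, n_power=20, n_consensus=50, seed=0):
    """Estimate the dominant eigenvector of sum_i M_i when site i only knows M_i.

    Each power iteration: local products M_i q_i, then n_consensus rounds of
    neighbor-only averaging (approximating the network-wide average, i.e. the sum
    up to a factor 1/n_sites), then local normalization. Only d-dimensional vectors
    are exchanged, never the raw samples behind M_i.
    """
    n_sites, d = len(local_mats), local_mats[0].shape[0]
    W = ring_weights(n_sites)
    q0 = np.random.default_rng(seed).standard_normal(d)
    Q = np.tile(q0 / np.linalg.norm(q0), (n_sites, 1))   # row i = site i's estimate
    for _ in range(n_power):
        Z = np.stack([M @ q for M, q in zip(local_mats, Q)])
        for _ in range(n_consensus):
            Z = W @ Z
        Q = Z / np.linalg.norm(Z, axis=1, keepdims=True)
    return Q

# Hypothetical data: 5 sites, each holding residuals dominated by one shared direction u
rng = np.random.default_rng(0)
d, n_sites, n_local = 10, 5, 40
u = rng.standard_normal(d)
u /= np.linalg.norm(u)
local_E = [np.outer(u, rng.standard_normal(n_local)) + 0.1 * rng.standard_normal((d, n_local))
           for _ in range(n_sites)]
local_M = [E @ E.T for E in local_E]

Q = distributed_power_method(local_M)
centralized = np.linalg.eigh(sum(local_M))[1][:, -1]   # reference: pooled (centralized) answer
```

Comparing each site's estimate with the centralized eigenvector is a toy version of the deviation-from-centralized analysis mentioned in the abstract.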

-

- Date & Time: Friday, September 23, 2016; 12:00 PM - 1:00 PM

Speaker: Dr. Earl McCune, Eridan Communications

Research Areas: Communications, Signal Processing

Abstract  Maximizing the operating energy efficiency of any wireless communication link requires a global optimization not only across the entire block diagram, but also over the selected signal modulation and aspects of the link operating protocol. This global optimization is first examined for the transmitter, receiver, and baseband circuitry. Then the aspects of signal modulation needed to access these circuit optimizations are presented, with examples, followed by the corresponding protocol aspects. A metric called modulation-available energy efficiency (MAEE) compares proposed signals for compatibility with high energy efficiency objectives.

-

- Date & Time: Wednesday, August 17, 2016; 1 PM

Speaker: Gilles Zerah, Centre Francais en Calcul Atomique et Moleculaire-Ile-de-France (CFCAM-IdF)

Research Areas: Applied Physics, Electronic and Photonic Devices

Abstract - The first part of the talk is a high-level review of modern technologies for atomic-level modelling of materials. The second part discusses band gap calculations and MERL results for semiconductors.

-

- Date & Time: Wednesday, July 13, 2016; 2:30 PM - 3:30 PM

Speaker: Richard Lehoucq, Sandia National Laboratories

Research Areas: Computer Vision, Digital Video, Machine Learning

Abstract  My presentation considers the research question of whether existing algorithms and software for the large-scale sparse eigenvalue problem can be applied to problems in spectral graph theory. I first provide an introduction to several problems involving spectral graph theory. I then provide a review of several different algorithms for the large-scale eigenvalue problem and briefly introduce the Anasazi package of eigensolvers.
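A small example of the kind of spectral-graph problem in question: the eigenvector for the second-smallest eigenvalue of a graph Laplacian L = D - A (the Fiedler vector) separates weakly connected clusters by sign. The sketch below uses a dense NumPy solver on a hypothetical 8-node toy graph (two 4-cliques joined by one edge). At scale, one would instead call a sparse Krylov eigensolver such as ARPACK (wrapped by SciPy as `scipy.sparse.linalg.eigsh`) or the Anasazi package mentioned in the abstract.

```python
import numpy as np

def graph_laplacian(n_nodes, edges):
    """Combinatorial Laplacian L = D - A of an undirected, unweighted graph."""
    L = np.zeros((n_nodes, n_nodes))
    for i, j in edges:
        L[i, i] += 1.0
        L[j, j] += 1.0
        L[i, j] -= 1.0
        L[j, i] -= 1.0
    return L

def fiedler(L):
    """Second-smallest eigenpair of a symmetric Laplacian (dense solver, small graphs only)."""
    vals, vecs = np.linalg.eigh(L)        # eigenvalues returned in ascending order
    return vals[1], vecs[:, 1]

# Hypothetical toy graph: two 4-cliques {0..3} and {4..7} joined by the single edge (3, 4)
clique = [(i, j) for i in range(4) for j in range(i + 1, 4)]
edges = clique + [(i + 4, j + 4) for i, j in clique] + [(3, 4)]
lam2, f = fiedler(graph_laplacian(8, edges))
clusters = f > 0                          # the sign pattern splits the two cliques
```

For this particular graph the algebraic connectivity works out to 3 - sqrt(7), about 0.354; only the two smallest eigenpairs are needed, which is exactly the regime where iterative sparse eigensolvers pay off.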

-

- Date & Time: Thursday, July 7, 2016; 2:00 PM

Speaker: Dr. Sonja Glavaski, Program Director, ARPA-E

MERL Host: Arvind Raghunathan

Research Area: Electric Systems

Abstract  The evolution of the grid faces significant challenges if it is to integrate and accept more energy from renewable generation and other Distributed Energy Resources (DERs). To maintain the grid's reliability and turn intermittent power sources into major contributors to the U.S. energy mix, we have to think about the grid differently and design it to be smarter and more flexible.

ARPA-E is interested in disruptive technologies that enable increased integration of DERs by real-time adaptation while maintaining grid reliability and reducing cost for customers with smart technologies. The potential impact is significant, with projected annual energy savings of more than 3 quadrillion BTU and annual CO2 emissions reductions of more than 250 million metric tons.

This talk will identify opportunities in developing next-generation control technologies and grid operation paradigms that address these challenges and enable secure, stable, and reliable transmission and distribution of electrical power. A summary of the newly announced ARPA-E NODES (Network Optimized Distributed Energy Systems) program, which funds development of these technologies, will be presented.

-

Electric motors pump our water, heat and cool our homes and offices, drive critical medical and surgical equipment, and, increasingly, operate our transportation systems. Approximately 99% of the world's electric energy is produced by rotating generators, and 45% of that energy is consumed by electric motors. The efficiency of this technology is vital to our energy sustainability and to reducing our carbon footprint. The reliability and lifetime of this technology have severe, and sometimes life-altering, consequences. Today's motor technology largely relies on mechanical bearings to support the motor's shaft. These bearings are the first components to fail, create frictional losses, and rely on lubricants that create contamination challenges and require periodic maintenance. In short, bearings are the Achilles' heel of modern electric motors.

-

While deep learning-based classification is generally addressed using standardized approaches, this is not the case for regression problems. Several different approaches are currently used for regression, and there is still room for innovation. We have developed a general deep regression method with a clear probabilistic interpretation. The basic building block in our construction is an energy-based model of the conditional output density p(y|x), where we use a deep neural network to predict the un-normalized density from input-output pairs (x, y). Such a construction is also commonly referred to as an implicit representation. The resulting learning problem is challenging, and we offer some insights on how to deal with it. We show good performance on several computer vision regression tasks, system identification problems, and 3D object detection using laser data.
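Inference with such an energy-based conditional density can be sketched in one dimension: the network's scalar output f(x, y) defines p(y|x) = exp(f(x, y)) / Z(x), where Z(x) is the integral of exp(f(x, y)) over y. Here a hand-written, hypothetical `score` function stands in for the trained network, and Z(x) is approximated on a uniform grid of y values, which is only feasible for low-dimensional outputs. Training, which the abstract notes is the hard part, is not shown.

```python
import numpy as np

def score(x, y):
    """Hypothetical stand-in for the network output f(x, y); higher means more likely.
    Two modes (y = x and y = -x) illustrate that p(y|x) need not be unimodal."""
    return np.logaddexp(-(y - x) ** 2 / 0.1, -(y + x) ** 2 / 0.1 - 1.0)

def conditional_density(x, y_grid):
    """p(y|x) = exp(f(x, y)) / Z(x), with Z(x) approximated by a Riemann sum over y_grid."""
    s = score(x, y_grid)
    unnorm = np.exp(s - s.max())        # subtracting the max avoids overflow; it cancels in p
    dy = y_grid[1] - y_grid[0]
    return unnorm / (unnorm.sum() * dy)

y_grid = np.linspace(-3.0, 3.0, 601)
p = conditional_density(1.0, y_grid)
y_hat = y_grid[np.argmax(p)]            # point prediction: the most likely y under the model
```

Unlike a network that regresses y directly, the density keeps the second mode near y = -1, which is useful when a single point estimate would be ambiguous.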

-

A Reconfigurable Intelligent Surface (RIS) is a planar structure engineered to enable dynamic control of electromagnetic waves. In wireless communications and networks, RISs are an emerging technology for realizing programmable and reconfigurable wireless propagation environments through nearly passive and tunable signal transformations. RIS-assisted programmable wireless environments are a multidisciplinary research endeavor. This presentation reports the latest research advances in modeling, analyzing, and optimizing RISs for wireless communications, with a focus on electromagnetically consistent models, analytical frameworks, and optimization algorithms.
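Under the simplest idealized model (single-antenna transmitter and receiver, narrowband channels, and an RIS whose elements apply lossless unit-modulus phase shifts, rather than the electromagnetically consistent models the talk focuses on), the received amplitude is |h_d + sum_n g_n exp(j theta_n) h_n|. Choosing theta_n = arg(h_d) - arg(g_n h_n) aligns every reflected path with the direct path and attains the upper bound |h_d| + sum_n |g_n h_n|. The channel draws below are hypothetical Rayleigh-fading samples.

```python
import numpy as np

def received_amplitude(h_d, h, g, theta):
    """|h_d + sum_n g_n * exp(j*theta_n) * h_n| for an idealized, lossless RIS."""
    return np.abs(h_d + np.sum(g * np.exp(1j * theta) * h))

def optimal_phases(h_d, h, g):
    """Rotate each reflected path g_n*h_n so it arrives in phase with the direct path h_d."""
    return np.angle(h_d) - np.angle(g * h)

rng = np.random.default_rng(0)
n_elem = 64

def complex_gaussian(size=None):
    """Hypothetical Rayleigh-fading channel samples with unit average power."""
    return (rng.standard_normal(size) + 1j * rng.standard_normal(size)) / np.sqrt(2)

h_d = complex_gaussian()        # direct transmitter -> receiver channel
h = complex_gaussian(n_elem)    # transmitter -> RIS element channels
g = complex_gaussian(n_elem)    # RIS element -> receiver channels

best = received_amplitude(h_d, h, g, optimal_phases(h_d, h, g))
random_phases = received_amplitude(h_d, h, g, rng.uniform(0.0, 2 * np.pi, n_elem))
```

By the triangle inequality no phase configuration can exceed the aligned value; realistic models add mutual coupling, amplitude-phase dependence, and discrete phase levels, which is where the optimization becomes nontrivial.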

-

Current state-of-the-art CNNs can localize and name objects in internet photos, yet they lack basic knowledge that a two-year-old toddler possesses: objects persist over time despite changes in the observer's viewpoint or during cross-object occlusions; objects have 3D extent; solid objects do not pass through each other. In this talk, I will introduce neural architectures that learn to parse video streams of a static scene into world-centric 3D feature maps by disentangling camera motion from scene appearance. I will show that the proposed architectures learn object permanence, can imagine RGB views from novel viewpoints in truly novel scenes, can conduct basic spatial reasoning and planning, can infer affordability in sentences, and can learn geometry-aware 3D concepts that allow pose-aware object recognition with weak/sparse labels. Our experiments suggest that the proposed architectures are essential for the models to generalize across objects and locations, and that they overcome many limitations of 2D CNNs. I will show how we can use the proposed 3D representations to build machine perception and physical understanding closer to that of humans.

-

Deep learning is emerging as a powerful tool for solving challenging inverse problems in computational imaging, including basic image restoration tasks such as denoising and deblurring, as well as image reconstruction problems in medical imaging. This talk will give an overview of state-of-the-art supervised learning techniques in this area and discuss two recent innovations: deep equilibrium architectures, which allow one to train an effectively infinite-depth reconstruction network; and model adaptation methods, which allow one to adapt a pre-trained reconstruction network to changes in the imaging forward model at test time.
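A deep equilibrium reconstruction network defines its output as a fixed point x* = T(x*; y) of a single layer T, rather than as the output of a fixed number of stacked layers. The sketch below assumes T is one proximal-gradient step for a linear forward model A, with soft-thresholding standing in for the learned denoiser a real DEQ would use, and finds the fixed point by plain iteration. Real DEQs typically use faster root finders and obtain gradients by implicit differentiation at the fixed point; neither is shown, and all names are hypothetical.

```python
import numpy as np

def soft_threshold(u, tau):
    return np.sign(u) * np.maximum(np.abs(u) - tau, 0.0)

def layer(x, A, y, step, lam):
    """T(x; y): a gradient step on 0.5*||A x - y||^2, then shrinkage standing in for a learned denoiser."""
    return soft_threshold(x - step * A.T @ (A @ x - y), step * lam)

def deq_forward(A, y, lam=0.05, tol=1e-8, max_iter=10_000):
    """Find x* = T(x*; y) by fixed-point iteration (the 'infinitely deep' forward pass)."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    for k in range(max_iter):
        x_next = layer(x, A, y, step, lam)
        if np.linalg.norm(x_next - x) < tol:
            return x_next, k + 1
        x = x_next
    return x, max_iter

# Hypothetical linear forward model and noisy measurements
rng = np.random.default_rng(0)
A = rng.standard_normal((40, 20))
x_true = np.zeros(20)
x_true[[2, 7, 11]] = [1.0, -0.5, 2.0]
y = A @ x_true + 0.01 * rng.standard_normal(40)

x_star, n_iter = deq_forward(A, y)
```

Because the depth is set by the convergence tolerance rather than by the architecture, the same trained layer can be run to equilibrium on every input; memory for training does not grow with the number of iterations when implicit differentiation is used.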

-

While computer vision has made significant progress by "looking" (detecting objects, actions, or people based on their appearance), it often does not listen. Yet cognitive science tells us that perception develops by making use of all our senses without intensive supervision. Towards this goal, in this talk I will present my research on audio-visual learning: we disentangle object sounds from unlabeled video, use audio as an efficient preview for action recognition in untrimmed video, decode the monaural soundtrack into its binaural counterpart by injecting visual spatial information, and use echoes to interact with the environment for spatial image representation learning. Together, these are steps towards multimodal understanding of the visual world, where audio serves as both a semantic and a spatial signal. Finally, I will briefly talk about our latest work on multisensory learning for robotics.

-

The integration of machine learning and optimization opens the door to new modeling paradigms that have already proven successful across a broad range of industries. Sports betting is a particularly exciting application area, where recent advances in both analytics and optimization can provide a lucrative edge. In this talk we will discuss three algorithmic sports betting games where combinations of machine learning and optimization have netted me significant winnings.

-

Microwave is not just for cooking, smart cars, or mobile phones. We can take advantage of the wide electromagnetic spectrum to do wonderful things that are more vital to our lives. For example, microwave ablation of cancer tumors is already in wide use, and microwave remote monitoring of vital signs is becoming more important as the population ages. This talk will focus on a biomedical use of microwave at the single-cell level. At low power, microwave can readily penetrate a cell membrane to interrogate what is inside a cell, without cooking or otherwise hurting it. It is currently the fastest, most compact, and least costly way to tell whether a cell is alive or dead. On the other hand, at higher power but lower frequency, the electromagnetic signal can interact strongly with the cell membrane to drill temporary nanometer-sized holes. The nanopores allow drugs to diffuse into the cell and, based on the reaction of the cell, individualized medicine can be developed and drug development can be sped up in general. Conversely, the nanopores allow strands of DNA molecules to be pulled out of the cell without killing it, which can speed up genetic engineering. Lastly, by changing both the power and frequency of the signal, we can produce either positive or negative dielectrophoresis effects, which we have used to coerce a live cell onto the examination table of Dr. Microwave, then usher it out after examination. These interesting uses of microwave and the resulting fundamental knowledge about biological cells will be explored in the talk.

-

The wireless landscape is evolving towards supporting a large population of connections for humans and machines with very diverse features and requirements. Perhaps the main motivation for 5G wireless systems is their flexibility to support heterogeneous connectivity requirements: enhanced mobile broadband (eMBB), massive machine-type communications (mMTC), and ultra-reliable low-latency communications (URLLC). However, this classification is rather limited and is currently being revised within the research community. The first part of this talk will discuss how this heterogeneity can be revised and which opportunities it opens with respect to spectrum usage. The second part of the talk will deal with performance guarantees of wireless services, specifically ultra-reliable communication, and outline the importance of machine learning in that context. The final part of the talk will provide a broader view on the evolution of wireless connectivity, including aspects implied by the resistance to the deployment of 5G, but also the new opportunities that can transform the way we build and utilize connected systems.

Algorithms to solve mixed integer linear programs have made incredible progress in the past 20 years. Key to these advances has been a mathematical analysis of the structure of the set of feasible solutions. We argue that a similar analysis is required for mixed integer quadratic programs, such as those that arise in sparse optimization in machine learning. One such analysis leads to the so-called perspective relaxation, which significantly improves solution performance on separable instances. Extensions of the perspective reformulation can lead to algorithms that are equivalent to some of the most popular modern sparsity-inducing non-convex regularizations in variable selection. Based on joint work with Hongbo Dong (Washington State Univ.), Oktay Gunluk (IBM), and Kun Chen (Univ. Connecticut).
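
To make the gap between relaxations concrete, here is a toy one-variable instance of our own choosing (it is not taken from the talk): minimize (x - a)^2 + lam*z subject to x(1 - z) = 0 with z binary, so z = 0 forces x = 0. The sketch compares the exact optimum with two continuous relaxations: the ordinary big-M relaxation, which assumes a bound |x| <= M with M >= |a|, and the perspective relaxation, which replaces x^2 with x^2/z and lets z range over (0, 1]. The function names and the values of a, lam, and M are hypothetical and chosen only for illustration.

```python
import math

def exact_value(a, lam):
    """True optimum of min (x - a)^2 + lam*z  s.t.  x*(1 - z) = 0, z in {0, 1}."""
    # z = 0 forces x = 0 (cost a^2); z = 1 leaves x free, so x = a (cost lam).
    return min(a * a, lam)

def perspective_bound(a, lam, n_grid=10_001):
    """Perspective relaxation: replace x^2 by x^2 / z and relax z to (0, 1].
    For a fixed z the minimizing x is a*z, so we only scan z on a grid."""
    best = a * a  # limit z -> 0 (x = a*z -> 0) gives a^2
    for k in range(1, n_grid):
        z = k / (n_grid - 1)
        x = a * z
        best = min(best, x * x / z - 2 * a * x + a * a + lam * z)
    return best

def big_m_bound(a, lam, M):
    """Continuous relaxation of the big-M model |x| <= M*z, z in [0, 1].
    At the optimum z = |x|/M, which turns the problem into soft-thresholding."""
    t = lam / (2 * M)  # soft-threshold level
    x = math.copysign(min(max(abs(a) - t, 0.0), M), a)
    return (x - a) ** 2 + lam * abs(x) / M

print(exact_value(2.0, 1.0), perspective_bound(2.0, 1.0), big_m_bound(2.0, 1.0, 4.0))
# 1.0 1.0 0.484375
```

In this one-variable case the perspective relaxation recovers the exact optimum, while the big-M relaxation gives a strictly weaker lower bound; that tightening is the kind of effect the abstract attributes to the perspective relaxation on separable instances.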

Recurrent neural networks (RNNs) are effective, data-driven models for sequential data such as audio and speech signals. However, like many deep networks, RNNs are essentially black boxes: though effective, their weights and architecture are not directly interpretable by practitioners. A major component of my dissertation research is explaining the success of RNNs and constructing new RNN architectures through "deep unfolding," which constructs and explains deep network architectures via an equivalence to inference in statistical models. Deep unfolding yields principled initializations for training deep networks, provides insight into their effectiveness, and aids interpretation of what these networks learn.
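
The abstract does not spell out a specific unfolded model, so the sketch below uses the textbook illustration of deep unfolding rather than the speaker's own RNN architectures: unrolling iterations of ISTA for sparse coding into network layers, in the spirit of learned ISTA (LISTA, Gregor and LeCun). Each layer is initialized from the statistical model (dictionary D, penalty lam), which is what "principled initialization" means here; untying and training the per-layer weights is omitted. The helper names are hypothetical.

```python
import numpy as np

def soft_threshold(v, theta):
    """Proximal operator of theta*||.||_1 (elementwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)

def ista(D, y, lam, n_iter):
    """Plain ISTA for min_x 0.5*||y - D x||^2 + lam*||x||_1."""
    L = np.linalg.norm(D, 2) ** 2  # Lipschitz constant of the gradient
    x = np.zeros(D.shape[1])
    for _ in range(n_iter):
        x = soft_threshold(x + D.T @ (y - D @ x) / L, lam / L)
    return x

def init_unfolded(D, lam, n_layers):
    """Model-based initialization: every layer starts with the ISTA weights
    W = D^T / L, S = I - D^T D / L and threshold lam / L (copies, so they can be untied)."""
    L = np.linalg.norm(D, 2) ** 2
    W = D.T / L
    S = np.eye(D.shape[1]) - D.T @ D / L
    return [(W.copy(), S.copy(), lam / L) for _ in range(n_layers)]

def unfolded_forward(layers, y):
    """Forward pass: layer k computes x <- soft_threshold(W_k y + S_k x, theta_k)."""
    x = np.zeros(layers[0][1].shape[0])
    for W, S, theta in layers:
        x = soft_threshold(W @ y + S @ x, theta)
    return x

rng = np.random.default_rng(0)
D = rng.standard_normal((10, 20))
y = rng.standard_normal(10)
layers = init_unfolded(D, lam=0.1, n_layers=15)
# Before any training, the 15-layer network reproduces 15 ISTA iterations exactly.
print(np.allclose(unfolded_forward(layers, y), ista(D, y, 0.1, 15)))  # True
```

With the weights tied across layers, the forward pass is a recurrence over iterations, which is one way to read an RNN as inference in a statistical model; training then starts from a network that already performs sensible inference.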

GPU computing is alive and well! The GPU has allowed researchers to overcome a number of computational barriers in important problem domains. Still, challenges remain in using GPUs for more general-purpose applications. GPUs achieve impressive speedups over CPUs because they have a large number of compute cores and high memory bandwidth. Recent GPUs are approaching 10 teraflops of single-precision performance on a single device. In this talk we will discuss current trends in GPUs, including some advanced features that allow them to exploit multi-context grains of parallelism. Further, we consider how GPUs can be treated as cloud-based resources, enabling a GPU-enabled server to deliver HPC cloud services by leveraging virtualization and collaborative filtering. Finally, we argue for new heterogeneous workloads and discuss the role of the Heterogeneous System Architecture (HSA), a standard that further supports integration of the CPU and GPU into a common framework. We present a new class of benchmarks specifically tailored to evaluate the benefits of features supported in the new HSA programming model.
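
The abstract credits GPU speedups to both core count and memory bandwidth. A common back-of-the-envelope way to see how the two interact is the roofline model, sketched below. The device figures (3584 cores, 1.4 GHz, 700 GB/s) are hypothetical values picked only to land near the 10-teraflop figure quoted above, and counting a fused multiply-add as 2 FLOPs per core per cycle is an assumption, not a property of any particular GPU.

```python
def peak_flops(cores, clock_hz, flops_per_cycle=2):
    """Peak throughput, assuming each core retires one fused multiply-add
    (counted as 2 FLOPs) per clock cycle."""
    return cores * clock_hz * flops_per_cycle

def roofline(peak_flops_per_s, bandwidth_bytes_per_s, flops_per_byte):
    """Attainable throughput under the roofline model: a kernel is capped either
    by compute (peak) or by how fast memory can feed it (bandwidth * intensity)."""
    return min(peak_flops_per_s, bandwidth_bytes_per_s * flops_per_byte)

# Hypothetical device, not a specific product.
PEAK = peak_flops(cores=3584, clock_hz=1.4e9)  # about 10 TFLOP/s
BW = 700e9                                     # 700 GB/s, assumed

# SAXPY (y = a*x + y, float32): 2 FLOPs per element, 12 bytes moved (read x, read y, write y).
saxpy = roofline(PEAK, BW, 2 / 12)
# A dense kernel that reuses each loaded byte heavily (100 FLOPs/byte, assumed).
dense = roofline(PEAK, BW, 100.0)

print(f"peak  {PEAK / 1e12:.2f} TFLOP/s")
print(f"saxpy {saxpy / 1e9:.1f} GFLOP/s (memory-bound)")
print(f"dense {dense / 1e12:.2f} TFLOP/s (compute-bound)")
```

Under these assumptions a streaming kernel like SAXPY reaches only about 1% of peak, which is why high memory bandwidth matters as much as core count for general-purpose workloads.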

Recent progress in generative modeling has significantly improved the naturalness of synthesized speech. In this talk I will summarize generative model-based approaches to speech synthesis such as WaveNet, a deep generative model of raw audio waveforms. We show that WaveNets are able to generate speech that mimics any human voice and sounds more natural than the best existing text-to-speech systems.
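
The abstract does not describe WaveNet's internals. The sketch below shows only its best-known building block as described in the original WaveNet paper, a stack of dilated causal convolutions with kernel size 2 and dilations 1, 2, ..., 512, and omits the gated activations, residual and skip connections, and the softmax output over quantized samples. The all-ones filters are untrained placeholders used to trace which past samples each output can see; the helper names are hypothetical.

```python
import numpy as np

def causal_dilated_conv(x, w, dilation):
    """y[t] = sum_k w[k] * x[t - k*dilation], treating x[t] = 0 for t < 0,
    so y[t] never depends on future samples."""
    T = len(x)
    y = np.zeros(T)
    for k, wk in enumerate(w):
        shift = k * dilation
        if shift < T:
            y[shift:] += wk * x[:T - shift]
    return y

def receptive_field(dilations, kernel_size=2):
    """Number of samples (current one included) that one output can depend on."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

dilations = [2 ** i for i in range(10)]  # 1, 2, 4, ..., 512
print(receptive_field(dilations))        # 1024

# Push an impulse through the stack with all-ones filters (illustration only).
x = np.zeros(2000)
x[0] = 1.0
y = x
for d in dilations:
    y = causal_dilated_conv(y, np.array([1.0, 1.0]), d)
print(np.count_nonzero(y))               # 1024: samples 0..1023 are reached
```

Doubling the dilation at each layer makes the receptive field grow exponentially with depth while the cost per layer stays constant, which is what lets a model of raw waveforms condition on long stretches of past audio.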

In this talk, we will present a framework for analyzing, in the high-dimensional limit, the exact dynamics of several stochastic optimization algorithms that arise in signal and information processing. For concreteness, we consider two prototypical problems: sparse principal component analysis and regularized linear regression (e.g., LASSO). For each case, we show that the time-varying estimates given by the algorithms converge weakly to a deterministic "limiting process" in the high-dimensional limit. Moreover, this limiting process can be characterized as the unique solution of a nonlinear PDE, and it provides exact information about the asymptotic performance of the algorithms. For example, performance metrics such as the MSE, the cosine similarity, and the misclassification rate in sparse support recovery can all be obtained by examining the deterministic limiting process. A steady-state analysis of the nonlinear PDE also reveals interesting phase transition phenomena related to the performance of the algorithms. Although our analysis is asymptotic in nature, numerical simulations show that the theoretical predictions are accurate for moderate signal dimensions.
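
The central claim above is that, as the dimension grows, a performance metric along the trajectory of a stochastic algorithm stops being random. A small simulation can make this concrete. As a simplification, the sketch swaps in plain online PCA with an Oja-type update on a spiked covariance model, not the sparse PCA or LASSO algorithms analyzed in the talk, and it does not derive the limiting PDE. The model, the step size tau/n, the time rescaling by n, and the values of omega, tau, t_max, and q0 are all our own illustrative assumptions.

```python
import numpy as np

def online_pca_overlap(n, omega=2.0, tau=0.5, t_max=4.0, q0=0.1, seed=0):
    """Oja-type online PCA on the spiked model y = sqrt(omega)*c*xi + a, with
    c ~ N(0, 1) and a ~ N(0, I_n). Uses step size tau/n and t_max*n samples
    (time rescaled by the dimension) and returns the final overlap |<xi, x>|."""
    rng = np.random.default_rng(seed)
    xi = np.zeros(n)
    xi[0] = 1.0                              # planted unit spike; e_0 WLOG (isotropic noise)
    x = np.zeros(n)
    x[0], x[1] = q0, np.sqrt(1.0 - q0 ** 2)  # unit-norm start with overlap q0
    for _ in range(int(t_max * n)):
        y = np.sqrt(omega) * rng.standard_normal() * xi + rng.standard_normal(n)
        x += (tau / n) * (y @ x) * y         # stochastic gradient step
        x /= np.linalg.norm(x)               # project back onto the unit sphere
    return abs(x @ xi)

for n in (50, 2000):
    runs = [online_pca_overlap(n, seed=s) for s in range(3)]
    print(n, np.round(runs, 3))
```

Across seeds the final overlap typically scatters noticeably at n = 50 but agrees closely at n = 2000, consistent with the metric concentrating around a deterministic limiting trajectory as n grows.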

While distributed information processing has a rich history, relatively little attention has been paid to the problem of collaboratively learning nonlinear geometric structures underlying data distributed across sites that are connected to each other in an arbitrary topology. In this talk, we discuss this problem in the context of collaborative dictionary learning from big, distributed data. We assume that a number of geographically distributed, interconnected sites hold massive local data and are interested in collaboratively learning a low-dimensional geometric structure underlying these data. In contrast to some previous works on subspace-based data representations, we focus on the geometric structure of a union of subspaces (UoS).
To this end, we propose a distributed algorithm, termed cloud K-SVD, for collaboratively learning a UoS structure underlying the distributed data of interest. The goal of cloud K-SVD is to learn an overcomplete dictionary at each individual site such that every sample in the distributed data can be represented by a small number of atoms of the learned dictionary. Cloud K-SVD accomplishes this goal without requiring communication of individual data samples between sites. In this talk, we also theoretically characterize how far the dictionaries learned at individual sites by cloud K-SVD deviate from a centralized solution. Finally, we numerically illustrate the efficacy of cloud K-SVD in the context of supervised training of nonlinear classifiers from distributed, labeled training data.
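
The K-SVD dictionary update needs the dominant eigenvector of a residual matrix. When that matrix is a sum of local terms E_i E_i^T held at different sites, one way to obtain it without moving any data is a power method whose matrix-vector products are averaged across the network by consensus. This is, as we understand it, the core of the dictionary-update step in cloud K-SVD; the sketch below shows only that step. The sparse-coding stage is omitted, and the ring topology, the 1/3 mixing weights, the round counts, the shared random seed, and the synthetic local matrices are assumptions made for illustration.

```python
import numpy as np

def ring_mixing_matrix(n_sites):
    """Symmetric, doubly stochastic weights for a ring: each site averages
    itself with its two neighbours (requires n_sites >= 3)."""
    W = np.zeros((n_sites, n_sites))
    for i in range(n_sites):
        for j in (i - 1, i, i + 1):
            W[i, j % n_sites] = 1.0 / 3.0
    return W

def consensus_average(values, W, rounds):
    """Each round, every site replaces its vector with a W-weighted mix of its
    neighbours' vectors; all rows drift toward the network-wide mean."""
    z = values.copy()
    for _ in range(rounds):
        z = W @ z
    return z

def distributed_power_method(local_mats, W, n_power=30, n_consensus=50, seed=0):
    """Estimate the top eigenvector of sum_i E_i E_i^T while each site touches
    only its own E_i and exchanges d-dimensional vectors with its neighbours."""
    d = local_mats[0].shape[0]
    v0 = np.random.default_rng(seed).standard_normal(d)  # shared seed, assumed
    v = np.tile(v0, (len(local_mats), 1))                # row i = site i's estimate
    for _ in range(n_power):
        local = np.stack([E @ (E.T @ v[i]) for i, E in enumerate(local_mats)])
        v = consensus_average(local, W, n_consensus)     # approx. mean of local products
        v /= np.linalg.norm(v, axis=1, keepdims=True)
    return v

rng = np.random.default_rng(1)
d, m, n_sites = 8, 20, 6
u = rng.standard_normal(d)
u /= np.linalg.norm(u)
# Hypothetical local residual matrices sharing one dominant direction u.
local_mats = [3.0 * np.outer(u, rng.standard_normal(m)) + rng.standard_normal((d, m))
              for _ in range(n_sites)]

v = distributed_power_method(local_mats, ring_mixing_matrix(n_sites))
top = np.linalg.eigh(sum(E @ E.T for E in local_mats))[1][:, -1]  # centralized check
print(np.abs(v @ top))  # one value per site, each close to 1
```

Only d-dimensional vectors cross the network, never samples, and the remaining mismatch with the centralized eigenvector is governed by how many consensus rounds are run, which loosely mirrors the deviation-from-centralized analysis mentioned in the abstract.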