PS-NeuS: A Probability-guided Sampler for Neural Implicit Surface Rendering

European Conference on Computer Vision (ECCV 2024)

MERL Researchers: Pedro Miraldo, Moitreya Chatterjee (Computer Vision).

Joint work with: G. Dias Pais, Valter Piedade (Instituto Superior Tecnico, Lisboa)

Several variants of Neural Radiance Fields (NeRFs) have significantly improved the accuracy of image synthesis and surface reconstruction of 3D scenes/objects. A key characteristic of these methods is that they cannot afford to train the neural network using every possible input, specifically, every pixel and every 3D point along each pixel's projection ray. While vanilla NeRFs uniformly sample both the image pixels and the 3D points along the projection rays, some variants guide the sampling of the 3D points along the projection rays. In this paper, we propose a guided sampling of both the image pixels and the 3D points along the rays. We leverage the implicit surface representation of the foreground scene to model a probability density function in a 3D image projection space. Additionally, we propose a new surface reconstruction loss that fully explores the proposed 3D image projection space model and incorporates near-to-surface and empty-space locations. By integrating our novel sampling strategy and novel loss into two different state-of-the-art neural implicit surface renderers, we achieve more accurate and detailed 3D reconstructions and improved image rendering, especially for the regions of interest in a scene.

Method

The scene is represented as a 3D grid and characterized by a Probability Density Function (PDF) computed from the Signed Distance Function (SDF) network. In the Interpolation stage, we propose to use a 3D orthographic image plus depth as the 3D grid. In the View Dependency stage, we then account for the camera viewpoint of the scene (for example, occlusions). In the grids shown, the blue hue encodes the probability value, normalized per grid: the more saturated the blue, the higher the probability. At every training step, in the Probabilistic Sampling stage, points are sampled to create the ray samples (red). In addition, we perform uniform sampling (green points) for overall image quality and scene exploration.
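The following is a minimal NumPy sketch of the sampling idea described above, not the authors' implementation. It builds a PDF over an orthographic (u, v, depth) grid from SDF values, then draws most samples from that PDF and a few uniformly. Several parts are assumptions made for illustration: the toy `sphere_sdf` stands in for the SDF network, the logistic-density mapping in `grid_pdf` and its `scale` parameter are one plausible way to turn an SDF into a density, and all function names are hypothetical. The View Dependency step (occlusions) is not modeled here.

```python
import numpy as np

def sphere_sdf(points, radius=0.5):
    """Toy stand-in for the SDF network: signed distance to a sphere at the origin."""
    return np.linalg.norm(points, axis=-1) - radius

def grid_pdf(sdf_values, scale=0.05):
    """Turn SDF values into a normalized PDF that peaks near the zero level set."""
    # Assumed mapping (logistic density of the SDF value), written with
    # exp(-|x|) so it stays numerically stable far from the surface.
    e = np.exp(-np.abs(sdf_values) / scale)
    density = e / (scale * (1.0 + e) ** 2)
    return density / density.sum()

def sample_cells(pdf, n_guided, n_uniform, rng):
    """Draw grid cells: n_guided from the PDF, then n_uniform uniformly at random."""
    flat = pdf.ravel()
    guided = rng.choice(flat.size, size=n_guided, p=flat)
    uniform = rng.integers(0, flat.size, size=n_uniform)
    idx = np.concatenate([guided, uniform])
    # Convert flat indices back to (u, v, depth) grid coordinates.
    return np.stack(np.unravel_index(idx, pdf.shape), axis=-1)

# Orthographic "image plus depth" grid: axes are (u, v, depth) in [-1, 1].
res = 32
axis = np.linspace(-1.0, 1.0, res)
grid = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1)

pdf = grid_pdf(sphere_sdf(grid))
rng = np.random.default_rng(0)
cells = sample_cells(pdf, n_guided=256, n_uniform=64, rng=rng)

# Each sampled cell gives a pixel (u, v) and a depth index along its ray.
pixels, depths = cells[:, :2], cells[:, 2]
```

In this sketch a single draw selects both the pixel and the depth along its ray, which mirrors the idea of guiding the sampling of image pixels and 3D points jointly. The uniform draws play the role of the green points: they keep low-probability regions from being ignored entirely.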

Results

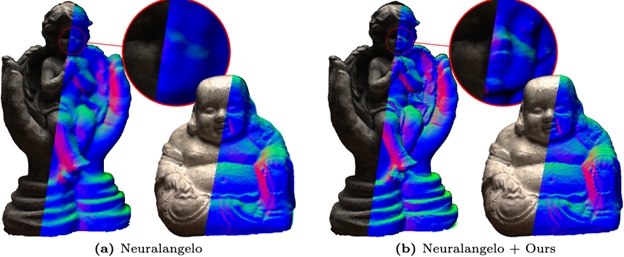

Our sampler extracts more detailed meshes. Our synthesized normal images are less noisy, with sharper edges in both scenes, leading to better reconstruction. Image quality remains similar. While some baselines fail to capture the foreground surfaces, our proposed sampling obtains a reasonable 3D structure and high-quality images.