Attention-Based Multimodal Fusion for Video Description

A novel neural network architecture that fuses multimodal information using a modality-dependent attention mechanism.

MERL Researchers: Chiori Hori, Tim K. Marks.


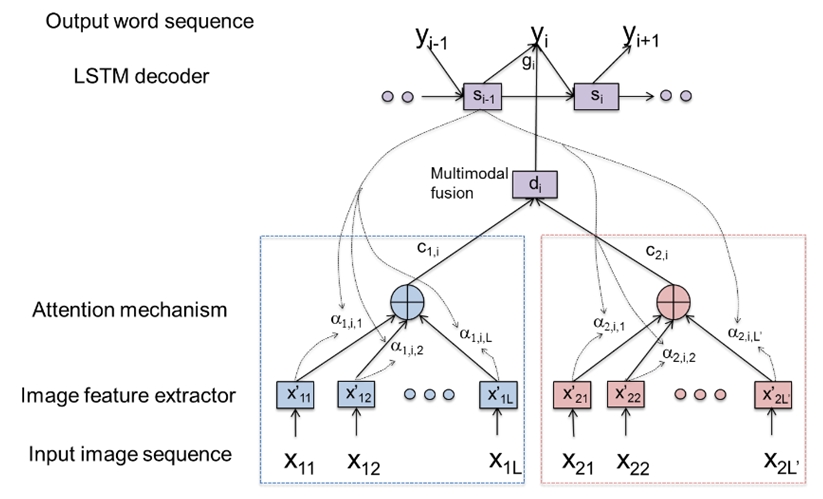

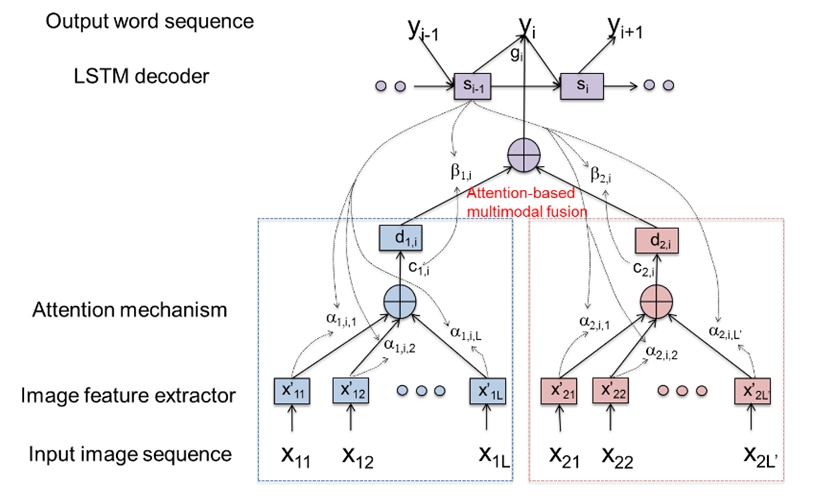

Understanding scenes through sensed information is a fundamental challenge for human-machine interfaces. We aim to develop methods for learning semantic representations from multimodal information, including both visual and audio data, as the basis for intelligent communication and interaction with machines. Toward this goal, we invented a modality-dependent attention mechanism for video captioning based on encoder-decoder sentence generation using recurrent neural networks (RNNs).

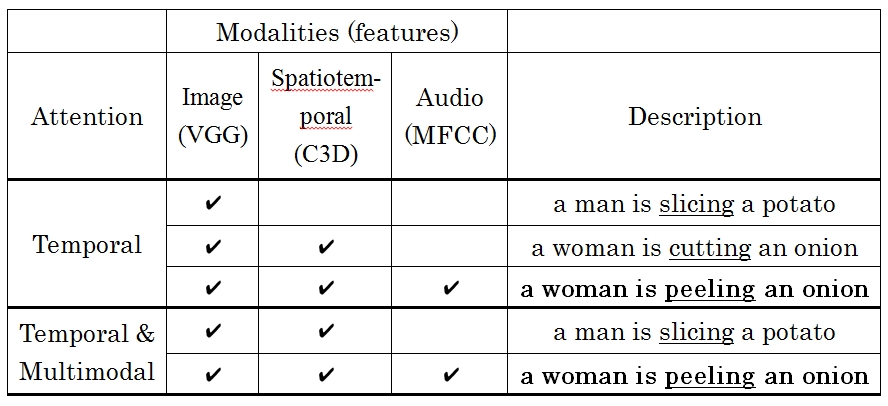

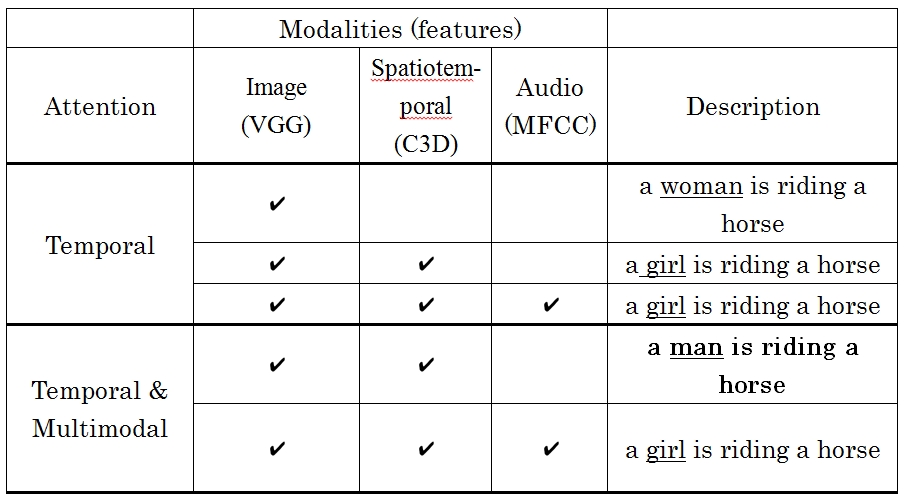

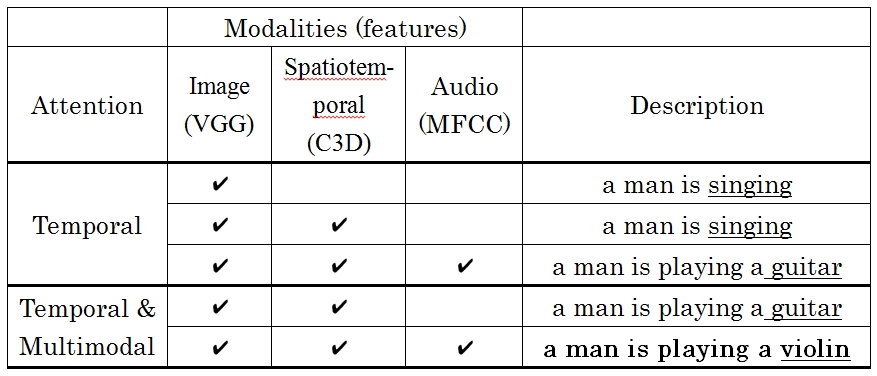

Our method provides an effective way to fuse multimodal information. Conventional attention schemes selectively attend to specific times (temporal attention) or spatial regions (spatial attention). Our attention model additionally attends to specific input modalities, such as image features, motion features, and audio features; we refer to this as modal attention. We evaluated our method on the Youtube2Text dataset, achieving results that are competitive with the current state of the art. The results show that a model combining modal attention with temporal attention outperforms a model that uses temporal attention alone.
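The combination of temporal and modal attention described above can be illustrated with a minimal sketch. This is not the published implementation: the additive scoring function, the parameter names (`W_s`, `W_f`, `w`, `W_proj`), the feature dimensions, and the random, untrained weights are all assumptions chosen for illustration. For each modality, temporal attention first pools the frame features into one context vector; modal attention then weights the per-modality context vectors, conditioned on the decoder state, and sums their projections into a single fused vector.

```python
import math
import random


def softmax(scores):
    """Normalize a list of scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def score(state, feat, p):
    """Additive attention score (an assumption): w . tanh(W_s state + W_f feat)."""
    hidden = [math.tanh(a + b)
              for a, b in zip(matvec(p["W_s"], state), matvec(p["W_f"], feat))]
    return sum(w * h for w, h in zip(p["w"], hidden))


def weighted_sum(weights, vectors):
    """Weighted sum of equally sized vectors."""
    dim = len(vectors[0])
    return [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(dim)]


def temporal_attention(frames, state, p):
    """Pool one modality's frame features over time into a context vector."""
    alpha = softmax([score(state, f, p) for f in frames])
    return weighted_sum(alpha, frames), alpha


def modal_attention(contexts, state, params):
    """Weight the per-modality context vectors and fuse their projections."""
    names = list(contexts)
    beta = softmax([score(state, contexts[n], params[n]["modal"]) for n in names])
    # Project each modality to a common size so the vectors can be summed.
    projected = [matvec(params[n]["W_proj"], contexts[n]) for n in names]
    return weighted_sum(beta, projected), dict(zip(names, beta))


def rand_matrix(rng, rows, cols):
    return [[rng.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]


def attention_params(rng, state_dim, feat_dim, att_dim):
    return {"W_s": rand_matrix(rng, att_dim, state_dim),
            "W_f": rand_matrix(rng, att_dim, feat_dim),
            "w": [rng.gauss(0, 1) for _ in range(att_dim)]}


def demo(seed=0):
    """Run both attention stages on random stand-in features."""
    rng = random.Random(seed)
    state_dim, att_dim, fused_dim = 8, 6, 5
    # Hypothetical (num_frames, feature_dim) per modality.
    shapes = {"image": (10, 16), "motion": (7, 12), "audio": (20, 4)}
    state = [rng.gauss(0, 1) for _ in range(state_dim)]  # stand-in decoder state
    contexts, params = {}, {}
    for name, (num_frames, feat_dim) in shapes.items():
        frames = rand_matrix(rng, num_frames, feat_dim)
        temporal_p = attention_params(rng, state_dim, feat_dim, att_dim)
        contexts[name], _ = temporal_attention(frames, state, temporal_p)
        params[name] = {"modal": attention_params(rng, state_dim, feat_dim, att_dim),
                        "W_proj": rand_matrix(rng, fused_dim, feat_dim)}
    return modal_attention(contexts, state, params)


if __name__ == "__main__":
    fused, beta = demo()
    print("modal attention weights:", beta)
    print("fused vector length:", len(fused))
```

In a trained captioning model, the decoder state would change at every generated word, so both sets of attention weights would be recomputed at each step; the sketch evaluates a single step only.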

MERL Publications

- Hori, C., Hori, T., Lee, T.-Y., Sumi, K., Hershey, J.R., Marks, T.K., "Attention-Based Multimodal Fusion for Video Description", arXiv, January 2017.

    @article{Hori2017jan,
      author = {Hori, Chiori and Hori, Takaaki and Lee, Teng-Yok and Sumi, Kazuhiko and Hershey, John R. and Marks, Tim K.},
      title = {{Attention-Based Multimodal Fusion for Video Description}},
      journal = {arXiv},
      year = 2017,
      month = jan,
      url = {https://arxiv.org/abs/1701.03126}
    }