TR2022-098

AutoTransfer: Subject Transfer Learning with Censored Representations on Biosignals Data


- Smedemark-Margulies, N., Wang, Y., Koike-Akino, T., Erdogmus, D., "AutoTransfer: Subject Transfer Learning with Censored Representations on Biosignals Data", International Conference of the IEEE Engineering in Medicine & Biology Society (EMBS), DOI: 10.1109/EMBC48229.2022.9871649, July 2022.

@inproceedings{Smedemark-Margulies2022jul,
  author = {Smedemark-Margulies, Niklas and Wang, Ye and Koike-Akino, Toshiaki and Erdogmus, Deniz},
  title = {{AutoTransfer: Subject Transfer Learning with Censored Representations on Biosignals Data}},
  booktitle = {International Conference of the IEEE Engineering in Medicine \& Biology Society (EMBS)},
  year = 2022,
  month = jul,
  publisher = {IEEE},
  doi = {10.1109/EMBC48229.2022.9871649},
  issn = {2694-0604},
  isbn = {978-1-7281-2782-8},
  url = {https://www.merl.com/publications/TR2022-098}
}



Research Areas:

Artificial Intelligence, Machine Learning, Signal Processing, Human-Computer Interaction

Abstract:

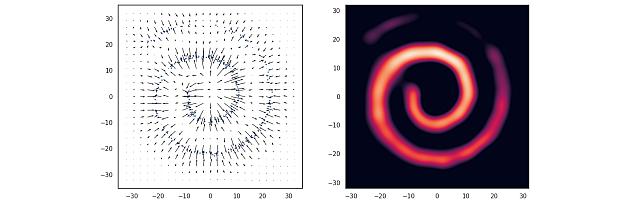

We investigate a regularization framework for subject transfer learning in which we train an encoder and classifier to minimize classification loss, subject to a penalty measuring independence between the latent representation and the subject label. We introduce three notions of independence and corresponding penalty terms using mutual information or divergence as a proxy for independence. For each penalty term, we provide several concrete estimation algorithms, using analytic methods as well as neural critic functions. We propose a hands-off strategy for applying this diverse family of regularization schemes to a new dataset, which we call “AutoTransfer”. We evaluate the performance of these individual regularization strategies under our AutoTransfer framework on EEG, EMG, and ECoG datasets, showing that these approaches can improve subject transfer learning for challenging real-world datasets.
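To make the shape of the penalized objective concrete, here is a minimal sketch of one possible form: a classification loss plus a weight `lam` times an estimate of the mutual information between a latent code and the subject label. It rests on assumptions that do not come from the paper: the latent code is assumed to be already discretized into bins, the estimator is a simple plug-in count-based one (the paper's estimators use analytic methods and neural critic functions instead), and the names `mutual_information`, `penalized_loss`, and `lam` are hypothetical.

```python
import math
from collections import Counter


def mutual_information(z, s):
    """Plug-in estimate of I(Z; S) in nats from paired discrete samples.

    z: sequence of discretized latent codes (assumption: already binned).
    s: sequence of subject labels, same length as z.
    """
    if len(z) != len(s):
        raise ValueError("z and s must have the same length")
    if len(z) == 0:
        raise ValueError("at least one sample is required")
    n = len(z)
    joint = Counter(zip(z, s))  # counts of each (z, s) pair
    count_z = Counter(z)        # marginal counts of z
    count_s = Counter(s)        # marginal counts of s
    mi = 0.0
    for (zi, si), c in joint.items():
        # p(z, s) * log( p(z, s) / (p(z) * p(s)) ), written with counts
        mi += (c / n) * math.log(c * n / (count_z[zi] * count_s[si]))
    return mi


def penalized_loss(classification_loss, z, s, lam=1.0):
    """Classification loss plus lam times the estimated dependence on subject."""
    return classification_loss + lam * mutual_information(z, s)


# Latent codes that carry no subject information incur no penalty,
# while codes that identify the subject are penalized.
independent = penalized_loss(0.5, z=[0, 0, 1, 1], s=[0, 1, 0, 1], lam=2.0)
dependent = penalized_loss(0.5, z=[0, 0, 1, 1], s=[0, 0, 1, 1], lam=2.0)
```

In this toy setting, a larger `lam` trades classification accuracy for a representation that reveals less about the subject. Choosing the penalty type and its weight for a new dataset is the kind of decision that the AutoTransfer strategy described above is meant to automate.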