TR2018-042

FoldingNet: Point Cloud Auto-encoder via Deep Grid Deformation


- Yang, Y., Feng, C., Shen, Y., Tian, D., "FoldingNet: Point Cloud Auto-encoder via Deep Grid Deformation", IEEE Conference on Computer Vision and Pattern Recognition (CVPR), DOI: 10.1109/CVPR.2018.00029, June 2018.

@inproceedings{Yang2018jun,
  author = {Yang, Yaoqing and Feng, Chen and Shen, Yiru and Tian, Dong},
  title = {{FoldingNet: Point Cloud Auto-encoder via Deep Grid Deformation}},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = 2018,
  month = jun,
  doi = {10.1109/CVPR.2018.00029},
  url = {https://www.merl.com/publications/TR2018-042}
}




Abstract:

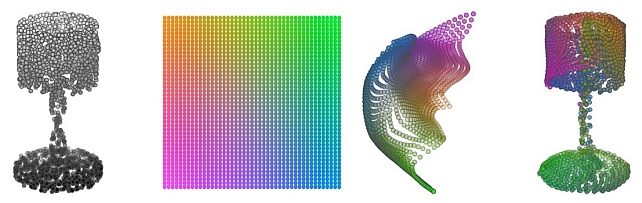

Recent deep networks that directly process the points of a point set, e.g., PointNet, have achieved state-of-the-art results on supervised learning tasks for point clouds, such as classification and segmentation. In this work, a novel end-to-end deep auto-encoder is proposed to address unsupervised learning challenges on point clouds. On the encoder side, a graph-based enhancement is applied on top of PointNet to promote local structures. On the decoder side, a novel folding-based decoder deforms a canonical 2D grid onto the underlying 3D object surface of a point cloud, achieving low reconstruction errors even for objects with delicate structures. The proposed decoder uses only about 7% of the parameters of a decoder built from fully-connected neural networks, yet yields a more discriminative representation that achieves higher linear SVM classification accuracy than the benchmark. In addition, the proposed decoder structure is shown theoretically to be a generic architecture that can reconstruct an arbitrary point cloud from a 2D grid. Our code is available at http://www.merl.com/research/license#FoldingNet
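The folding idea described in the abstract can be illustrated with a small NumPy sketch. This is not the authors' released code: the codeword size (512), the 45×45 grid over [-1, 1]², the use of two successive folds each built from a three-layer shared perceptron, and the names `make_grid`, `init_mlp`, `shared_mlp`, `fold` and `folding_decoder` are all assumptions chosen for illustration. The weights are random rather than trained, so the output is not a meaningful shape; what the sketch shows is the mechanism, in which the same codeword is concatenated to every grid point and a per-point MLP maps each concatenated vector to a 3D coordinate.

```python
import numpy as np


def make_grid(side: int, extent: float = 1.0) -> np.ndarray:
    """Return a (side*side, 2) array of points on a regular 2D grid in [-extent, extent]^2."""
    ticks = np.linspace(-extent, extent, side)
    u, v = np.meshgrid(ticks, ticks, indexing="ij")
    return np.stack([u.ravel(), v.ravel()], axis=1)


def init_mlp(rng, sizes):
    """Random (untrained) weights and zero biases for an MLP with the given layer sizes."""
    return [(rng.standard_normal((m, n)) / np.sqrt(m), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def shared_mlp(x, layers):
    """Apply the same MLP to every row (point) of x; ReLU after every layer except the last."""
    for i, (w, b) in enumerate(layers):
        x = x @ w + b
        if i < len(layers) - 1:
            x = np.maximum(x, 0.0)
    return x


def fold(codeword, points, layers):
    """One folding step: replicate the codeword, concatenate it to each point, map to 3D."""
    tiled = np.tile(codeword, (points.shape[0], 1))
    return shared_mlp(np.concatenate([tiled, points], axis=1), layers)


def folding_decoder(codeword, grid, fold1, fold2):
    """Hypothetical two-fold decoder: 2D grid -> intermediate 3D points -> final 3D points."""
    first = fold(codeword, grid, fold1)
    return fold(codeword, first, fold2)


rng = np.random.default_rng(0)
code_dim, hidden = 512, 512               # assumed sizes for illustration, not from the paper
codeword = rng.standard_normal(code_dim)  # stands in for the encoder's output codeword
grid = make_grid(45)                      # 45 * 45 = 2025 grid points
fold1 = init_mlp(rng, [code_dim + 2, hidden, hidden, 3])
fold2 = init_mlp(rng, [code_dim + 3, hidden, hidden, 3])
points = folding_decoder(codeword, grid, fold1, fold2)
print(points.shape)  # (2025, 3)
```

Because the MLP weights are shared across all grid points, the parameter count in this sketch does not depend on how many points are produced: `folding_decoder(codeword, make_grid(10), fold1, fold2)` yields 100 points from the same weights. A fully-connected decoder, by contrast, needs an output layer sized to emit every coordinate at once, which is one plausible way to read the abstract's parameter-savings claim.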