TR2012-091

SLAM Using Both Points and Planes for Hand-Held 3D Sensors


- Taguchi, Y., Jian, Y.-D., Ramalingam, S., Feng, C., "SLAM Using Both Points and Planes for Hand-Held 3D Sensors", International Symposium on Mixed and Augmented Reality (ISMAR), November 2012.

@inproceedings{Taguchi2012nov,
  author = {Taguchi, Y. and Jian, Y.-D. and Ramalingam, S. and Feng, C.},
  title = {{SLAM Using Both Points and Planes for Hand-Held 3D Sensors}},
  booktitle = {International Symposium on Mixed and Augmented Reality (ISMAR)},
  year = 2012,
  month = nov,
  url = {https://www.merl.com/publications/TR2012-091}
}


Research Area: Computer Vision

Abstract:

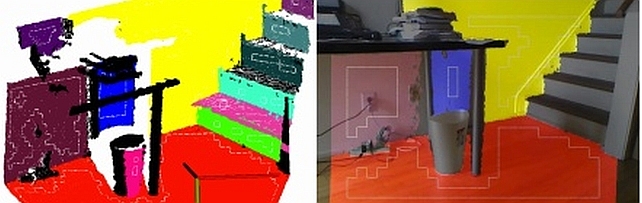

We present a simultaneous localization and mapping (SLAM) algorithm for a hand-held 3D sensor that uses both points and planes as primitives. Our algorithm uses any combination of three point/plane primitives (3 planes, 2 planes and 1 point, 1 plane and 2 points, and 3 points) in a RANSAC framework to efficiently compute the sensor pose. As the number of planes is significantly smaller than the number of points in typical 3D scenes, our RANSAC algorithm prefers primitive combinations involving more planes than points. In contrast to existing approaches that mainly use points for registration, our algorithm has the following advantages: (1) it enables faster correspondence search and registration due to the smaller number of plane primitives; (2) it produces plane-based 3D models that are more compact than point-based ones; and (3) being a global registration algorithm, our approach does not suffer from local minima or any initialization problems. Our experiments demonstrate real-time, interactive 3D reconstruction of office spaces using a hand-held Kinect sensor.
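The preference for plane-heavy minimal sets described in the abstract can be illustrated with a short Python sketch. It only shows how a RANSAC loop might choose which kind of three-primitive set to draw; it does not compute a pose. The names `PREFERENCE`, `feasible_types`, and `sample_minimal_set` are hypothetical, and the rule "always take the most plane-heavy feasible type" is an assumption made for illustration, not necessarily the selection policy used in the paper.

```python
import random

# Minimal-set types as (number of planes, number of points), listed in the
# preference order stated in the abstract: more planes first.
PREFERENCE = [(3, 0), (2, 1), (1, 2), (0, 3)]


def feasible_types(num_planes, num_points):
    """Return the minimal-set types that the available correspondences allow."""
    return [
        (p, q) for (p, q) in PREFERENCE
        if num_planes >= p and num_points >= q
    ]


def sample_minimal_set(plane_ids, point_ids, rng):
    """Draw one set of three primitives, preferring plane-heavy types.

    Assumption (not from the paper): the most plane-heavy feasible type is
    always chosen. Degenerate sets, such as three parallel planes, are not
    filtered out here.
    """
    types = feasible_types(len(plane_ids), len(point_ids))
    if not types:
        raise ValueError("not enough primitives to form a set of three")
    n_planes, n_points = types[0]
    planes = rng.sample(plane_ids, n_planes)
    points = rng.sample(point_ids, n_points)
    return planes, points


if __name__ == "__main__":
    rng = random.Random(0)
    # Two plane correspondences and ten point correspondences are available,
    # so the draw should contain 2 planes and 1 point.
    planes, points = sample_minimal_set([0, 1], list(range(10)), rng)
    print(len(planes), "planes,", len(points), "point(s)")
```

In a full pipeline, each drawn set would be passed to a pose solver for that primitive combination and scored against the remaining correspondences; those steps are outside the scope of this sketch.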


Related News & Events


NEWS: ISMAR 2012: publication by Yuichi Taguchi, Srikumar Ramalingam and others

Date: November 5, 2012

Where: International Symposium on Mixed and Augmented Reality (ISMAR)

Research Area: Computer Vision

Brief: The paper "SLAM Using Both Points and Planes for Hand-Held 3D Sensors" by Taguchi, Y., Jian, Y.-D., Ramalingam, S. and Feng, C. was presented at the International Symposium on Mixed and Augmented Reality (ISMAR).