TR2012-020

Depth Sensing Using Active Coherent Illumination

Boufounos, P.T., "Depth Sensing Using Active Coherent Illumination", IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), DOI: 10.1109/ICASSP.2012.6289146, March 2012, pp. 5417-5420.

    @inproceedings{Boufounos2012mar,
      author = {Boufounos, P.T.},
      title = {{Depth Sensing Using Active Coherent Illumination}},
      booktitle = {IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)},
      year = 2012,
      pages = {5417--5420},
      month = mar,
      doi = {10.1109/ICASSP.2012.6289146},
      issn = {1520-6149},
      isbn = {978-1-4673-0045-2},
      url = {https://www.merl.com/publications/TR2012-020}
    }



Abstract:

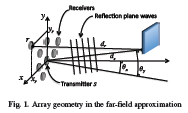

We examine the use of active coherent sensing, an increasingly available technology, for sensing the depth of scenes. A scene is a sparse signal, but it also exhibits significant structure that cannot be exploited by standard sparse recovery algorithms. Instead, inspired by the model-based compressive sensing literature, we develop a scene model that incorporates occlusion constraints when recovering the depth map. Our model is computationally tractable: we develop a variation of the well-known model-based Compressive Sampling Matching Pursuit (CoSaMP) algorithm and demonstrate that our approach significantly improves reconstruction performance.
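The idea of replacing plain sparsity with an occlusion-aware model inside CoSaMP can be illustrated with a minimal Python/NumPy sketch. It rests on a simplified scene model that is an assumption, not taken from the paper: the scene is discretized into `n_pix` lines of sight with `n_depth` depth bins each, a line of sight holds at most one reflector (everything behind the first reflecting surface is occluded), and at most `k` lines of sight are occupied. The matrix `A` is a generic stand-in for the coherent acquisition system, and the helper names `occlusion_project` and `model_cosamp` are hypothetical. The only change from standard CoSaMP is that each hard-thresholding step becomes a projection onto this model; the candidate-selection step simply projects the proxy onto `2 * k` occupied pixels, a simplification of the enlarged model used in the model-based compressive sensing literature.

```python
import numpy as np


def occlusion_project(x, n_pix, n_depth, k):
    """Project x onto the assumed occlusion model: at most one nonzero depth
    bin per line of sight (pixel) and at most k occupied pixels in total."""
    X = x.reshape(n_pix, n_depth)
    best = np.argmax(np.abs(X), axis=1)        # strongest depth bin per pixel
    vals = X[np.arange(n_pix), best]           # its (possibly complex) value
    keep = np.argsort(-np.abs(vals))[:k]       # k strongest pixels
    out = np.zeros_like(X)
    out[keep, best[keep]] = vals[keep]         # all other bins treated as occluded/empty
    return out.reshape(-1)


def model_cosamp(A, y, n_pix, n_depth, k, iters=20, tol=1e-10):
    """CoSaMP with hard thresholding replaced by occlusion_project (sketch)."""
    x = np.zeros(A.shape[1], dtype=np.result_type(A, y))
    r = y.astype(x.dtype)
    for _ in range(iters):
        proxy = A.conj().T @ r
        # Candidate support: simplified enlarged model (2k pixels, one bin each).
        omega = np.flatnonzero(occlusion_project(proxy, n_pix, n_depth, 2 * k))
        T = np.union1d(omega, np.flatnonzero(x))
        # Least-squares fit restricted to the merged support.
        b = np.zeros_like(x)
        b[T] = np.linalg.lstsq(A[:, T], y, rcond=None)[0]
        # Prune the estimate back onto the occlusion model.
        x = occlusion_project(b, n_pix, n_depth, k)
        r = y - A @ x
        if np.linalg.norm(r) <= tol * np.linalg.norm(y):
            break
    return x
```

Calling `model_cosamp(A, y, n_pix, n_depth, k)` returns an estimate in which every line of sight has at most one nonzero depth bin, so a depth map can be read off as the index of that bin per pixel. In a real sensor, `A` would encode the coherent illumination and acquisition physics rather than, for example, a random Gaussian matrix used for testing.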

Related News & Events

IEEE-NH ComSig lecture by MERL's Petros Boufounos

Date: April 4, 2019

Where: Nashua Public Library, Nashua, NH

MERL Contact: Petros T. Boufounos

Research Areas: Computational Sensing, Signal Processing

MERL's Petros Boufounos gave a lecture for the IEEE-NH ComSig chapter at the Nashua Public Library as part of the IEEE Signal Processing Society Distinguished Lecturer series.

Title: "An Inverse Problem Framework for Array Processing Systems."

Abstract: Array-based sensing systems, such as ultrasonic, radar, and optical (LIDAR) systems, are becoming increasingly important in a variety of applications, including robotics, autonomous driving, medical imaging, and virtual reality, among others. This has led to continuous improvements in sensing hardware, but also to increasing demand for theory and methods to inform the system design and improve the processing. In this talk we will discuss how recent advances in formulating and solving inverse problems, such as compressed sensing, blind deconvolution, and sparse signal modeling, can be applied to significantly reduce the cost and improve the capabilities of array-based and multichannel sensing systems. We will show that these systems share a common mathematical framework, which allows us to describe both the acquisition hardware and the scene being acquired. Under this framework we can exploit prior knowledge of the scene, the system, and a variety of errors that might occur, allowing for significant improvements in reconstruction accuracy. Furthermore, we can consider the design of the system itself in the context of the inverse problem, leading to designs that are more efficient, more accurate, or less expensive, depending on the application. In the talk we will explore applications of this model to LIDAR and depth sensing, radar and distributed radar, and ultrasonic sensing. In the context of these applications, we will describe how different models can lead to improved specifications in ultrasonic systems, robustness to position and timing errors in distributed array systems, and cost reduction and new capabilities in LIDAR systems.

ICASSP 2012: 8 publications by Petros T. Boufounos, Dehong Liu, John R. Hershey, Jonathan Le Roux and Zafer Sahinoglu

Date: March 25, 2012

Where: IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)

MERL Contacts: Dehong Liu; Jonathan Le Roux; Petros T. Boufounos

The following papers were presented at the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP):

- "Dictionary Learning Based Pan-Sharpening" by Liu, D. and Boufounos, P.T.
- "Multiple Dictionary Learning for Blocking Artifacts Reduction" by Wang, Y. and Porikli, F.
- "A Compressive Phase-Locked Loop" by Schnelle, S.R., Slavinsky, J.P., Boufounos, P.T., Davenport, M.A. and Baraniuk, R.G.
- "Indirect Model-based Speech Enhancement" by Le Roux, J. and Hershey, J.R.
- "A Clustering Approach to Optimize Online Dictionary Learning" by Rao, N. and Porikli, F.
- "Parametric Multichannel Adaptive Signal Detection: Exploiting Persymmetric Structure" by Wang, P., Sahinoglu, Z., Pun, M.-O. and Li, H.
- "Additive Noise Removal by Sparse Reconstruction on Image Affinity Nets" by Sundaresan, R. and Porikli, F.
- "Depth Sensing Using Active Coherent Illumination" by Boufounos, P.T.