Chiori Hori

- Phone: 617-621-7568

- Email:

-

Position:

Research / Technical Staff

Senior Principal Research Scientist -

Education:

Ph.D., Tokyo Institute of Technology, 2002 -

Research Areas:

- Artificial Intelligence

- Speech & Audio

- Computer Vision

- Machine Learning

- Robotics

- Human-Computer Interaction

- Signal Processing

External Links:

Chiori's Quick Links

-

Biography

Chiori has been a member of MERL's research team since 2015. Her work is focused on spoken dialog and audio visual scene-aware dialog technologies toward human-robot communications. She's on the editorial board of "Computer Speech and Language" and is a technical committee member of "Speech and Language Processing Group" of IEEE Signal Processing Society. Prior to joining MERL, Chiori spent 8 years at Japan's National Institute of Information and Communication Technology (NICT), where she held the position of Research Manager of the Spoken Language Communication Laboratory. She also spent time researching at Carnegie Mellon and the NTT Communication Science Laboratories, prior to NICT.

-

Recent News & Events

-

NEWS MERL at the International Conference on Robotics and Automation (ICRA) 2024 Date: May 13, 2024 - May 17, 2024

Where: Yokohama, Japan

MERL Contacts: Anoop Cherian; Radu Corcodel; Stefano Di Cairano; Chiori Hori; Siddarth Jain; Devesh K. Jha; Jonathan Le Roux; Diego Romeres; William S. Yerazunis

Research Areas: Artificial Intelligence, Machine Learning, Optimization, Robotics, Speech & AudioBrief- MERL made significant contributions to both the organization and the technical program of the International Conference on Robotics and Automation (ICRA) 2024, which was held in Yokohama, Japan from May 13th to May 17th.

MERL was a Bronze sponsor of the conference, and exhibited a live robotic demonstration, which attracted a large audience. The demonstration showcased an Autonomous Robotic Assembly technology executed on MELCO's Assista robot arm and was the collaborative effort of the Optimization and Robotics Team together with the Advanced Technology department at Mitsubishi Electric.

MERL researchers from the Optimization and Robotics, Speech & Audio, and Control for Autonomy teams also presented 8 papers and 2 invited talks covering topics on robotic assembly, applications of LLMs to robotics, human robot interaction, safe and robust path planning for autonomous drones, transfer learning, perception and tactile sensing.

- MERL made significant contributions to both the organization and the technical program of the International Conference on Robotics and Automation (ICRA) 2024, which was held in Yokohama, Japan from May 13th to May 17th.

-

EVENT MERL Contributes to ICASSP 2024 Date: Sunday, April 14, 2024 - Friday, April 19, 2024

Location: Seoul, South Korea

MERL Contacts: Petros T. Boufounos; François Germain; Chiori Hori; Toshiaki Koike-Akino; Jonathan Le Roux; Hassan Mansour; Kieran Parsons; Joshua Rapp; Anthony Vetro; Pu (Perry) Wang; Gordon Wichern

Research Areas: Artificial Intelligence, Computational Sensing, Machine Learning, Robotics, Signal Processing, Speech & AudioBrief- MERL has made numerous contributions to both the organization and technical program of ICASSP 2024, which is being held in Seoul, Korea from April 14-19, 2024.

Sponsorship and Awards

MERL is proud to be a Bronze Patron of the conference and will participate in the student job fair on Thursday, April 18. Please join this session to learn more about employment opportunities at MERL, including openings for research scientists, post-docs, and interns.

MERL is pleased to be the sponsor of two IEEE Awards that will be presented at the conference. We congratulate Prof. Stéphane G. Mallat, the recipient of the 2024 IEEE Fourier Award for Signal Processing, and Prof. Keiichi Tokuda, the recipient of the 2024 IEEE James L. Flanagan Speech and Audio Processing Award.

Jonathan Le Roux, MERL Speech and Audio Senior Team Leader, will also be recognized during the Awards Ceremony for his recent elevation to IEEE Fellow.

Technical Program

MERL will present 13 papers in the main conference on a wide range of topics including automated audio captioning, speech separation, audio generative models, speech and sound synthesis, spatial audio reproduction, multimodal indoor monitoring, radar imaging, depth estimation, physics-informed machine learning, and integrated sensing and communications (ISAC). Three workshop papers have also been accepted for presentation on audio-visual speaker diarization, music source separation, and music generative models.

Perry Wang is the co-organizer of the Workshop on Signal Processing and Machine Learning Advances in Automotive Radars (SPLAR), held on Sunday, April 14. It features keynote talks from leaders in both academia and industry, peer-reviewed workshop papers, and lightning talks from ICASSP regular tracks on signal processing and machine learning for automotive radar and, more generally, radar perception.

Gordon Wichern will present an invited keynote talk on analyzing and interpreting audio deep learning models at the Workshop on Explainable Machine Learning for Speech and Audio (XAI-SA), held on Monday, April 15. He will also appear in a panel discussion on interpretable audio AI at the workshop.

Perry Wang also co-organizes a two-part special session on Next-Generation Wi-Fi Sensing (SS-L9 and SS-L13) which will be held on Thursday afternoon, April 18. The special session includes papers on PHY-layer oriented signal processing and data-driven deep learning advances, and supports upcoming 802.11bf WLAN Sensing Standardization activities.

Petros Boufounos is participating as a mentor in ICASSP’s Micro-Mentoring Experience Program (MiME).

About ICASSP

ICASSP is the flagship conference of the IEEE Signal Processing Society, and the world's largest and most comprehensive technical conference focused on the research advances and latest technological development in signal and information processing. The event attracts more than 3000 participants.

- MERL has made numerous contributions to both the organization and technical program of ICASSP 2024, which is being held in Seoul, Korea from April 14-19, 2024.

See All News & Events for Chiori -

-

Awards

-

AWARD Honorable Mention Award at NeurIPS 23 Instruction Workshop Date: December 15, 2023

Awarded to: Lingfeng Sun, Devesh K. Jha, Chiori Hori, Siddharth Jain, Radu Corcodel, Xinghao Zhu, Masayoshi Tomizuka and Diego Romeres

MERL Contacts: Radu Corcodel; Chiori Hori; Siddarth Jain; Devesh K. Jha; Diego Romeres

Research Areas: Artificial Intelligence, Machine Learning, RoboticsBrief- MERL Researchers received an "Honorable Mention award" at the Workshop on Instruction Tuning and Instruction Following at the NeurIPS 2023 conference in New Orleans. The workshop was on the topic of instruction tuning and Instruction following for Large Language Models (LLMs). MERL researchers presented their work on interactive planning using LLMs for partially observable robotic tasks during the oral presentation session at the workshop.

-

AWARD MERL team wins the Audio-Visual Speech Enhancement (AVSE) 2023 Challenge Date: December 16, 2023

Awarded to: Zexu Pan, Gordon Wichern, Yoshiki Masuyama, Francois Germain, Sameer Khurana, Chiori Hori, and Jonathan Le Roux

MERL Contacts: François Germain; Chiori Hori; Jonathan Le Roux; Gordon Wichern; Yoshiki Masuyama

Research Areas: Artificial Intelligence, Machine Learning, Speech & AudioBrief- MERL's Speech & Audio team ranked 1st out of 12 teams in the 2nd COG-MHEAR Audio-Visual Speech Enhancement Challenge (AVSE). The team was led by Zexu Pan, and also included Gordon Wichern, Yoshiki Masuyama, Francois Germain, Sameer Khurana, Chiori Hori, and Jonathan Le Roux.

The AVSE challenge aims to design better speech enhancement systems by harnessing the visual aspects of speech (such as lip movements and gestures) in a manner similar to the brain’s multi-modal integration strategies. MERL’s system was a scenario-aware audio-visual TF-GridNet, that incorporates the face recording of a target speaker as a conditioning factor and also recognizes whether the predominant interference signal is speech or background noise. In addition to outperforming all competing systems in terms of objective metrics by a wide margin, in a listening test, MERL’s model achieved the best overall word intelligibility score of 84.54%, compared to 57.56% for the baseline and 80.41% for the next best team. The Fisher’s least significant difference (LSD) was 2.14%, indicating that our model offered statistically significant speech intelligibility improvements compared to all other systems.

- MERL's Speech & Audio team ranked 1st out of 12 teams in the 2nd COG-MHEAR Audio-Visual Speech Enhancement Challenge (AVSE). The team was led by Zexu Pan, and also included Gordon Wichern, Yoshiki Masuyama, Francois Germain, Sameer Khurana, Chiori Hori, and Jonathan Le Roux.

-

-

Research Highlights

-

MERL Publications

- , "Interactive Robot Action Replanning using Multimodal LLM Trained from Human Demonstration Videos", IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), March 2025.BibTeX TR2025-034 PDF

- @inproceedings{Hori2025mar,

- author = {Hori, Chiori and Kambara, Motonari and Sugiura, Komei and Ota, Kei and Khurana, Sameer and Jain, Siddarth and Corcodel, Radu and Jha, Devesh K. and Romeres, Diego and {Le Roux}, Jonathan},

- title = {{Interactive Robot Action Replanning using Multimodal LLM Trained from Human Demonstration Videos}},

- booktitle = {IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)},

- year = 2025,

- month = mar,

- url = {https://www.merl.com/publications/TR2025-034}

- }

- , "ZeroST: Zero-Shot Speech Translation", Interspeech, DOI: 10.21437/Interspeech.2024-1088, September 2024, pp. 392-396.BibTeX TR2024-122 PDF

- @inproceedings{Khurana2024sep,

- author = {Khurana, Sameer and Hori, Chiori and Laurent, Antoine and Wichern, Gordon and {Le Roux}, Jonathan},

- title = {{ZeroST: Zero-Shot Speech Translation}},

- booktitle = {Interspeech},

- year = 2024,

- pages = {392--396},

- month = sep,

- doi = {10.21437/Interspeech.2024-1088},

- issn = {2958-1796},

- url = {https://www.merl.com/publications/TR2024-122}

- }

- , "Human Action Understanding-based Robot Planning using Multimodal LLM", IEEE International Conference on Robotics and Automation (ICRA), June 2024.BibTeX TR2024-066 PDF

- @inproceedings{Kambara2024jun,

- author = {Kambara, Motonari and Hori, Chiori and Sugiura, Komei and Ota, Kei and Jha, Devesh K. and Khurana, Sameer and Jain, Siddarth and Corcodel, Radu and Romeres, Diego and {Le Roux}, Jonathan},

- title = {{Human Action Understanding-based Robot Planning using Multimodal LLM}},

- booktitle = {IEEE International Conference on Robotics and Automation (ICRA) Workshop},

- year = 2024,

- month = jun,

- url = {https://www.merl.com/publications/TR2024-066}

- }

- , "Interactive Planning Using Large Language Models for Partially Observable Robotic Tasks", IEEE International Conference on Robotics and Automation (ICRA), DOI: 10.1109/ICRA57147.2024.10610981, May 2024, pp. 14054-14061.BibTeX TR2024-052 PDF Video

- @inproceedings{Sun2024may,

- author = {Sun, Lingfeng and Jha, Devesh K. and Hori, Chiori and Jain, Siddarth and Corcodel, Radu and Zhu, Xinghao and Tomizuka, Masayoshi and Romeres, Diego},

- title = {{Interactive Planning Using Large Language Models for Partially Observable Robotic Tasks}},

- booktitle = {IEEE International Conference on Robotics and Automation (ICRA)},

- year = 2024,

- pages = {14054--14061},

- month = may,

- publisher = {IEEE},

- doi = {10.1109/ICRA57147.2024.10610981},

- isbn = {979-8-3503-8457-4},

- url = {https://www.merl.com/publications/TR2024-052}

- }

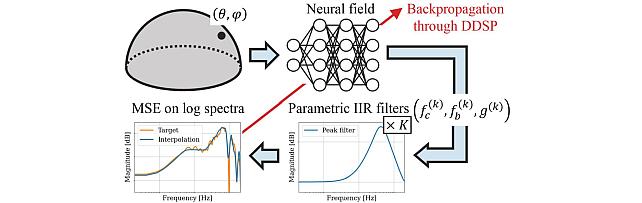

- , "NIIRF: Neural IIR Filter Field for HRTF Upsampling and Personalization", IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), DOI: 10.1109/ICASSP48485.2024.10448477, March 2024, pp. 1016-1020.BibTeX TR2024-026 PDF Software

- @inproceedings{Masuyama2024mar,

- author = {Masuyama, Yoshiki and Wichern, Gordon and Germain, François G and Pan, Zexu and Khurana, Sameer and Hori, Chiori and {Le Roux}, Jonathan},

- title = {{NIIRF: Neural IIR Filter Field for HRTF Upsampling and Personalization}},

- booktitle = {IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP)},

- year = 2024,

- pages = {1016--1020},

- month = mar,

- doi = {10.1109/ICASSP48485.2024.10448477},

- url = {https://www.merl.com/publications/TR2024-026}

- }

- , "Interactive Robot Action Replanning using Multimodal LLM Trained from Human Demonstration Videos", IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), March 2025.

-

Software & Data Downloads

-

Videos

-

MERL Issued Patents

-

Title: "Long-context End-to-end Speech Recognition System"

Inventors: Hori, Takaaki; Moritz, Niko; Hori, Chiori; Le Roux, Jonathan

Patent No.: 11,978,435

Issue Date: May 7, 2024 -

Title: "System and Method for Using Human Relationship Structures for Email Classification"

Inventors: Harsham, Bret A.; Hori, Chiori

Patent No.: 11,651,222

Issue Date: May 16, 2023 -

Title: "Method and System for Scene-Aware Interaction"

Inventors: Hori, Chiori; Cherian, Anoop; Chen, Siheng; Marks, Tim; Le Roux, Jonathan; Hori, Takaaki; Harsham, Bret A.; Vetro, Anthony; Sullivan, Alan

Patent No.: 11,635,299

Issue Date: Apr 25, 2023 -

Title: "Scene-Aware Video Encoder System and Method"

Inventors: Cherian, Anoop; Hori, Chiori; Le Roux, Jonathan; Marks, Tim; Sullivan, Alan

Patent No.: 11,582,485

Issue Date: Feb 14, 2023 -

Title: "Low-latency Captioning System"

Inventors: Hori, Chiori; Hori, Takaaki; Cherian, Anoop; Marks, Tim; Le Roux, Jonathan

Patent No.: 11,445,267

Issue Date: Sep 13, 2022 -

Title: "System and Method for a Dialogue Response Generation System"

Inventors: Hori, Chiori; Cherian, Anoop; Marks, Tim; Hori, Takaaki

Patent No.: 11,264,009

Issue Date: Mar 1, 2022 -

Title: "Scene-Aware Video Dialog"

Inventors: Geng, Shijie; Gao, Peng; Cherian, Anoop; Hori, Chiori; Le Roux, Jonathan

Patent No.: 11,210,523

Issue Date: Dec 28, 2021 -

Title: "Method and System for Multi-Label Classification"

Inventors: Hori, Takaaki; Hori, Chiori; Watanabe, Shinji; Hershey, John R.; Harsham, Bret A.; Le Roux, Jonathan

Patent No.: 11,086,918

Issue Date: Aug 10, 2021 -

Title: "Position Estimation Under Multipath Transmission"

Inventors: Kim, Kyeong-Jin; Orlik, Philip V.; Hori, Chiori

Patent No.: 11,079,495

Issue Date: Aug 3, 2021 -

Title: "Method and System for Multi-Modal Fusion Model"

Inventors: Hori, Chiori; Hori, Takaaki; Hershey, John R.; Marks, Tim

Patent No.: 10,417,498

Issue Date: Sep 17, 2019 -

Title: "Method and System for Training Language Models to Reduce Recognition Errors"

Inventors: Hori, Takaaki; Hori, Chiori; Watanabe, Shinji; Hershey, John R.

Patent No.: 10,176,799

Issue Date: Jan 8, 2019 -

Title: "Method and System for Role Dependent Context Sensitive Spoken and Textual Language Understanding with Neural Networks"

Inventors: Hori, Chiori; Hori, Takaaki; Watanabe, Shinji; Hershey, John R.

Patent No.: 9,842,106

Issue Date: Dec 12, 2017

-

Title: "Long-context End-to-end Speech Recognition System"